Robopocalypse (see also Boing Boing) is a novel by roboticist Daniel Wilson, who foretells a global apocalypse brought on by an artificial intelligence (AI) that hijacks automation systems worldwide and uses them to wipe out humanity.

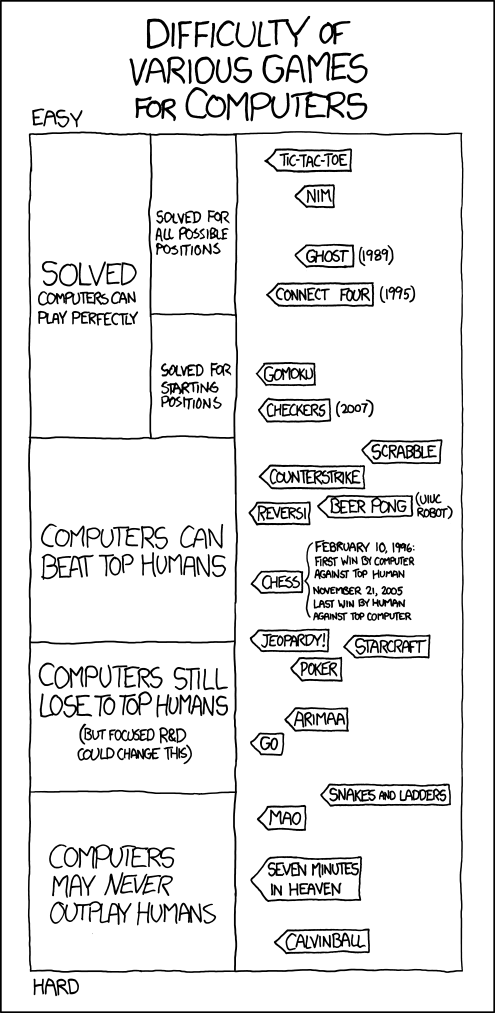

Computers, and now robots too, are making amazing progress these days and out-compete humans at everything but snakes and ladders. Many fear that humans will soon be the Neanderthals of their robot overlords.

Others who work on robotics, like Samuel Kenyon from In the Eye of the Brainstorm, ridicule the notion of robot uprisings as mere Hollywood tropes that never die. Some who research the brain, like Mark Changizi, dismiss the idea that machines capable of such a thing are even remotely within our reach; Changizi warns us not to hold our breath waiting for artificial brains.

Knowing these two, one might suspect that a personal interest (funding, etc.) in propagating such potentially careless beliefs certainly helps in holding them. Perhaps the main role, however, is played by their attitudes toward evolution: either as something natural that does not quite apply to ‘inorganic’, ‘artificial’ technology (in Mark’s case), or as something that does apply to technology but thereby ensures that everything runs smoothly. After all, who has ever heard of a species of animal that suddenly, collectively, just loses it and bites another species into extinction?

The Slapstick Robopocalypse (a critique of Samuel’s standpoint)

In “Robopocalypse”, a researcher creates Archos, an artificial intelligence, but then loses control over it. Archos becomes a rogue AI out to exterminate the human race. It compromises automation systems, and domestic robots, self-driving cars, and so on all turn on their owners and everybody around them. The killing is so swift that humanity does not even see it coming. Cars mow down people while their passengers scream; elevators drop terrified people down shafts; planes crash themselves; rooms asphyxiate their occupants by sealing themselves.

I have not read the novel, but I have defended similar scenarios as plausible before. Against Samuel’s charge that such scenarios misunderstand how the technology comes into existence in the first place, and that no plausible scenario exists, I immediately wrote up a semi-plausible one, and it would not take long to close its gaps:

They connect everything to everything else; every appliance in every household has an internet connection. You tweet your Roomba. In 2016, the first robot was remotely hacked in order to commit a murder. The internet reaches everywhere: your insulin pump talks to your fridge, and your "i-Phone Siri 2025" assistant neural implant has a new app that electrically stimulates your frontal cortex if you are depressed. Some teenager writes a computer virus just for fun, adds a novel auto-evolution subroutine downloaded from somewhere, calls it the "kill all humans" virus, brags to his friends on social-net3.0, and goes to sleep. MS-AOL-virus-evolution-watch is on the lookout, and as good as MS and AOL stuff always was. The singularity has not occurred yet; we are trying to stop the exponential takeoff in 'intelligence', a self-nurturing positive feedback loop. However, the network is ready for it: the parameter indicating how much parallel computing would be needed was overestimated by a factor of ten, which is why the evolution-watch ads claim tenfold overkill: “From now on, nothing we need to worry about.” At 8:53 and 17.392 seconds the next morning, you get zapped by the Siri implant, and your insulin shoots way too high. While you are dying on the floor, the Roomba pushes a cloth into your mouth, because one of the fun instructions was: Kill as fast as possible. The whole thing kills 70 percent of the human population in fifteen minutes before it dies down because the robots turn on each other, for the difference between augmented human and robot has long since become merely a matter of terminology. Civilization is destroyed, the remaining 30 percent wish they were dead, and their wish is granted over the next few weeks. From the perspective of the microorganisms living below the topsoil, humanity was like a day under the weather.

The film rights to Robopocalypse have been bought by Steven Spielberg, who announced a film to be released in 2013. Books and movies are business, and therefore both must commit the same mistake that let The Borg succumb to Captain Picard. In order to sell, they must not only make silly assumptions and distort science enough that the whole thing becomes ridiculously implausible (the first big mistake), but in the end at least two humans must survive while the last terminator goes spastic on a USB mouse, and you can bet your firstborn that those two humans won’t be a couple of bearded Middle Eastern gay guys walking hand in hand into the sunset.

The second big mistake: robots are already so accurate and fast today that a mere augmented human would stand no chance at all. We would be toast, period, and we are already toast anyway, but more on that later. In the real world, the Borg win, just as Mickey Mouse would get smashed in a rat trap in season one; that is all there is to it, and the assimilated humans are the better for it.

One aspect that leads to dismissing a robopocalypse, as well as to believing in certain naïve versions of it, is the almost religious profundity that people assign to the mysterious future. Something surely must either be the savior and make up for everything, rendering the world fair after all, or if not, the world is totally evil and will end in complete horror. But the profound “singularity” is wishful thinking. Much as we are not the center of the universe, the future will be mostly like past human history: not the awakening of a Fourth Reich that stays for a thousand years, but lots of silly, idiotic stuff that makes you slap your forehead. The developers of computing back in the days without the internet could not imagine Viagra-spam viruses. No way! On something like an internet, anybody would immediately be able to look up anything with search engines and find better deals if they wanted to, right? Computer viruses that do nothing but kill your computer? Why would that ever happen? Impossible, that’s not what computers are made for. Ha ha ha.

Gerhard Adam, as so often, hit the nail squarely on the head, insisting that especially clever people always overlook the law of unintended consequences. For what mysterious reasons might large groups of robots with survival inclinations be released into the world? “For no better reason than why rabbits arrived in Australia.” I also like this one:

“You must admit, that to consider building a machine that is (by transhumanist standards) better than people in every way (including intelligence) and then suggesting that it would be subservient to humans, is a bit of a stretch.”

Well, it is wishful thinking. But let me return to the other side of this topic, namely that the more plausible scenarios for a sort of robopocalypse do not involve mishaps and unforeseen consequences at all, but unintended yet perfectly predictable consequences that we cannot reverse even if we all really wanted to, which we don't.

Robopocalypse Now (a critique of Mark’s views)

This brings us back to Mark’s position, which holds that “artificial brains” are still far off in the future. Sure, this depends on the definition of “artificial brains”. However, barring the silly proposition that animals at some point evolved some ghostly quantum soul that cannot arise in anything but strictly biological evolution and that is the true kernel of esoteric consciousness, artificial brains arrived many years ago. They are called “computers”; perhaps there is one near you right now, or two, or three. I count two on my body alone, and twelve in this small office when counting automatic coffee makers and such. Computers have already invaded everything, robots have started to, and relatively soon (which is immediately on the usual biological evolutionary time scales) they may no longer need biological substrates like animals at all, nor give a millisecond’s thought to whether humans consider them "as good as humans".

Mark thinks that because we do not even understand the 300 neurons of the roundworm, although we have researched them to death, we will not understand millions of neurons, and so we cannot make a brain. But since when does one need to understand the gazillions of subunits in a modular architecture, or even fully understand any single unit, merely in order to tinker with them and build something artificial from them?

It is the very nature of evolutionary processes that they design complex, efficient systems without understanding anything. The use of evolutionary design methods is also taking off as we speak. For example, evolutionary design leads to fractal-like lenses and antennas that are very efficient artificial systems, where we do not understand why they work so well (if we did, we would not need design by evolution, which often leads to suboptimal results).

Intelligence (I) is nature’s AI. Thus, if AI were impossible, I would be impossible, too. Since I am possible and I is possible, so is AI, period. The artificial/natural distinction is an arbitrary one. Nature evolved bacteria, plants, animals, humans, social systems, and also technology. The artificial belongs to nature. The emergence of artificial brains is not so much us making them as nature doing it yet again, just as nature evolved eyes independently dozens of times. "Artificial" nanotechnological brains have existed for millions of years already; nature made them; they are called “brains”; and tomorrow we may indeed find out how to copy major steps of this in the laboratory without understanding a single neuron, thus ending up with something very close to a human brain, shortcomings included.

If tomorrow we have artificial brains in robots that out-compete us, some will perhaps call them "merely" bio/silicon hybrids, and perhaps even "natural" instead of "artificial", simply because we do not "really understand" how they work, which may prove to some that we did not really make them. Hey, how could we if we do not know how, right? To some this sounds logical, but nobody understands a computer all the way from the transistor physics to the software either, nor can we make a modern computer without other computers and robots, so they make each other (!). They are now also starting to program each other, and in this sense we are all together in one natural evolution, and even computers are not "artificial" (in the sense of "made by us fully understanding how"). We may keep insisting that there are no "artificial brains" as marvelous as ours, but this hubris will not stop the robots from kicking our butts.

Evolution has no interest in reproducing exactly what is already there, and technology is part of evolution. Airplanes do not flap their wings. This is not because we cannot make planes with flapping wings; we do not make them because it would be silly. If artificial human brains do not come along, the reason will not be that we cannot make them, but that other ("better") things emerged, and these may have little interest in resurrecting dinosaurs. Why would any intelligent being reproduce something as bad as a human, as if the world did not already have too many of those darn things?

What am I saying?

As many of my readers know, my personal take on these issues is unpalatable to almost any audience but a quantum physicist turned Zen Buddhist somewhere along the way during two weeks of fasting in the sun. In fact, I have pretty much given up arguing any further for the rationality that advanced AI will be capable of, for the Zen-like nirvana it will therefore aim for, and for the resulting rational global switch-off: a last decision that achieves a stable state of non-suffering that also ‘makes sense’, which I call “Global Suicide”. (Admittedly, I have not yet presented the core argument here on Science2.0, for several reasons.)

Nevertheless, I hold that in order to understand what has at times misleadingly been called the singularity, we need to understand evolution without being biased by the fears that evolved (!). Existential risk, “will humanity survive?”, and other such wishful thinking have no place in the debate if we do not want to fool ourselves. Hence, mentioning Zen Buddhism is not an attempt at cool-sounding subcultural decadence; it is indeed the evolved craving for existence that ensures we do not realize what is actually going on, and that thereby makes us suffer (a core Zen Buddhist insight). The evolving “robopocalypse” hides itself this way. What is it? It is just the usual evolution. The robopocalypse started millions of years ago, and we can do nothing to prevent it, nor should we. We are the robots, we have always been the robots, and now we are starting to merge with artificial intelligence and with what we call proper robots; the humans from before have already disappeared.

Some transhumanists will hold that this is almost precisely what they claim, too, but I do not see transhumanists sufficiently grasping that evolution is not about autonomous systems which just happen to have evolved and now live their independent, free, Westernized individualistic lives. That, again, is just thinking brought on by evolved interpretations and perceptions that are useful for people in positions that make it likely they call themselves transhumanists. In reality, the whole biosphere evolved, the surfaces of planets become moldy, and almost nothing works without everything else being there, too. Modern structures, social ones and those typical of the information age, make this even clearer, but it was always the case. Evolution is coevolution of the environment, not cute animals hopping around.

The arrival of I and thus AI means that ‘Gaia in some sense becomes conscious’, but she is not that sixties hippie chick either. She is not a subsystem competing with similar ones; she has no mother, desires no children, and has no fear of death. Autonomous humanoids are not an issue, nor is their rationality relevant. They might just as well follow some ultimate scientistic religion, assuming they do not simply disappear altogether should individualized consciousness of the human sort become an inefficient intermediate fossil or vestigial organ. Self-catalyzing AI in global networks allows Gaia to conclude ‘the core argument of global suicide’.

The end of the presently ongoing robopocalypse comes when Gaia develops her rationality and realizes that there isn’t anything more rational, peaceful, and meaningful to do than to go to sleep again, while ensuring never to be awoken again by that irrational process called evolution.

--------------------------------------------

More from Sascha Vongehr, sorted topic by topic
