The often (always?) brilliant Scott Alexander has an essay that parallels the thesis of an essay I've been meaning to write for the last six years. It's the perfect topic to kick off this column, which I've been meaning to get off the ground for the last six months, so here goes. The epistemological question he lays out was pivotal for me, setting me on a path toward the range of topics and conclusions I plan to tackle in this space.

In "Epistemic Learned Helplessness," Alexander makes the case that thinking for ourselves is overrated most of the time. For most of us, on most topics, good science and pseudoscience, good history and pseudohistory, are going to be equally convincing. Bayesian logic suggests that sticking with mainstream experts and consensus thinking is a safer bet than rolling the dice on the Galileo Gambit.

I quote here at length, but I really encourage you to read the whole thing.

A friend recently complained about how many people lack the basic skill of believing arguments. That is, if you have a valid argument for something, then you should accept the conclusion. Even if the conclusion is unpopular, or inconvenient, or you don’t like it. He envisioned an art of rationality that would make people believe something after it had been proven to them.

And I nodded my head, because it sounded reasonable enough, and it wasn’t until a few hours later that I thought about it again and went “Wait, no, that would be a terrible idea.”

I don’t think I’m overselling myself too much to expect that I could argue circles around the average uneducated person. Like I mean that on most topics, I could demolish their position and make them look like an idiot. Reduce them to some form of “Look, everything you say fits together and I can’t explain why you’re wrong, I just know you are!” Or, more plausibly, “Shut up I don’t want to talk about this!”

And there are people who can argue circles around me. Maybe not on every topic, but on topics where they are experts and have spent their whole lives honing their arguments. When I was young I used to read pseudohistory books; Immanuel Velikovsky’s Ages in Chaos is a good example of the best this genre has to offer. I read it and it seemed so obviously correct, so perfect, that I could barely bring myself to bother to search out rebuttals.

And then I read the rebuttals, and they were so obviously correct, so devastating, that I couldn’t believe I had ever been so dumb as to believe Velikovsky.

And then I read the rebuttals to the rebuttals, and they were so obviously correct that I felt silly for ever doubting.

And so on for several more iterations, until the labyrinth of doubt seemed inescapable. What finally broke me out wasn’t so much the lucidity of the consensus view so much as starting to sample different crackpots. Some were almost as bright and rhetorically gifted as Velikovsky, all presented insurmountable evidence for their theories, and all had mutually exclusive ideas. After all, Noah’s Flood couldn’t have been a cultural memory both of the fall of Atlantis and of a change in the Earth’s orbit, let alone of a lost Ice Age civilization or of megatsunamis from a meteor strike. So given that at least some of those arguments are wrong and all seemed practically proven, I am obviously just gullible in the field of ancient history. Given a total lack of independent intellectual steering power and no desire to spend thirty years building an independent knowledge base of Near Eastern history, I choose to just accept the ideas of the prestigious people with professorships in Archaeology, rather than those of the universally reviled crackpots who write books about Venus being a comet.

You could consider this a form of epistemic learned helplessness, where I know any attempt to evaluate the arguments is just going to be a bad idea so I don’t even try. If you have a good argument that the Early Bronze Age worked completely differently from the way mainstream historians believe, I just don’t want to hear about it. If you insist on telling me anyway, I will nod, say that your argument makes complete sense, and then totally refuse to change my mind or admit even the slightest possibility that you might be right.

(This is the correct Bayesian action: if I know that a false argument sounds just as convincing as a true argument, argument convincingness provides no evidence either way. I should ignore it and stick with my prior.)

I consider myself lucky in that my epistemic learned helplessness is circumscribed; there are still cases where I’ll trust the evidence of my own reason. In fact, I trust it in most cases other than infamously deceptive arguments in fields I know little about. But I think the average uneducated person doesn’t and shouldn’t. Anyone anywhere – politicians, scammy businessmen, smooth-talking romantic partners – would be able to argue them into anything. And so they take the obvious and correct defensive maneuver – they will never let anyone convince them of any belief that sounds “weird”.

. . . Responsible doctors are at the other end of the spectrum from terrorists here. I once heard someone rail against how doctors totally ignored all the latest and most exciting medical studies. The same person, practically in the same breath, then railed against how 50% to 90% of medical studies are wrong. These two observations are not unrelated. Not only are there so many terrible studies, but pseudomedicine (not the stupid homeopathy type, but the type that links everything to some obscure chemical on an out-of-the-way metabolic pathway) has, for me, proven much like pseudohistory – unless I am an expert in that particular subsubfield of medicine, it can sound very convincing even when it’s very wrong.

The medical establishment offers a shiny tempting solution. First, a total unwillingness to trust anything, no matter how plausible it sounds, until it’s gone through an endless cycle of studies and meta-analyses. Second, a bunch of Institutes and Collaborations dedicated to filtering through all these studies and analyses and telling you what lessons you should draw from them.

I’m glad that some people never develop epistemic learned helplessness, or develop only a limited amount of it, or only in certain domains. It seems to me that although these people are more likely to become terrorists or Velikovskians or homeopaths, they’re also the only people who can figure out if something basic and unquestionable is wrong, and make this possibility well-known enough that normal people start becoming willing to consider it.

But I’m also glad epistemic learned helplessness exists. It seems like a pretty useful social safety valve most of the time.
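Alexander's parenthetical about the "correct Bayesian action" can be made concrete with the odds form of Bayes' theorem. This is my own sketch, not Alexander's, and the symbols and numbers are illustrative assumptions. Let T stand for "the argument's conclusion is true," F for "it is false," and C for "the argument sounds convincing to me":

$$
\frac{P(T \mid C)}{P(F \mid C)} = \frac{P(C \mid T)}{P(C \mid F)} \cdot \frac{P(T)}{P(F)}
$$

Suppose that in a field where I'm gullible, false arguments sound just as convincing as true ones. Then P(C | T) = P(C | F), the first factor on the right equals 1, and my posterior odds are exactly my prior odds. With made-up numbers, take a prior of P(T) = 0.05 that Velikovsky is right (odds of 1:19), and assume 90% of both true and false arguments in ancient history sound convincing to me:

$$
\frac{P(T \mid C)}{P(F \mid C)} = \frac{0.9}{0.9} \cdot \frac{0.05}{0.95} = \frac{1}{19} \quad\Longrightarrow\quad P(T \mid C) = 0.05
$$

The convincing argument leaves me exactly where I started.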

I'd come to a similar way of thinking six or seven years ago. I think I've always been pretty good at critical thinking, but I was introduced to some new tools from the epistemology of science by (ironically*) Gary Taubes in his book Good Calories, Bad Calories. From Taubes I learned concepts like Occam's Razor and the null hypothesis. I learned to ask whether an experiment actually tested the hypothesis it was meant to test. I learned to read carefully to see whether the authors' written conclusions actually matched what their data said. I learned about literature reviews, meta-analyses, and the Cochrane Collaboration, which convenes panels of experts to survey the relevant literature on health-care interventions and diagnostic tests and organize it into literature reviews and consensus statements. I learned what a scientific consensus is and how it can differ from elite conventional wisdom, which is often conflated with or mistaken for consensus.

In May of 2012, I was working for a community food security non-profit in Hartford, Connecticut. Food policy journalist Michael Pollan was a big hero of mine at the time. He was speaking at an event organized by The Connecticut Forum, and I was excited to see him in person. Pollan was warm, engaging, and insightful as usual. But it was a comment by Yale environmental law professor Daniel Esty that stuck with me.

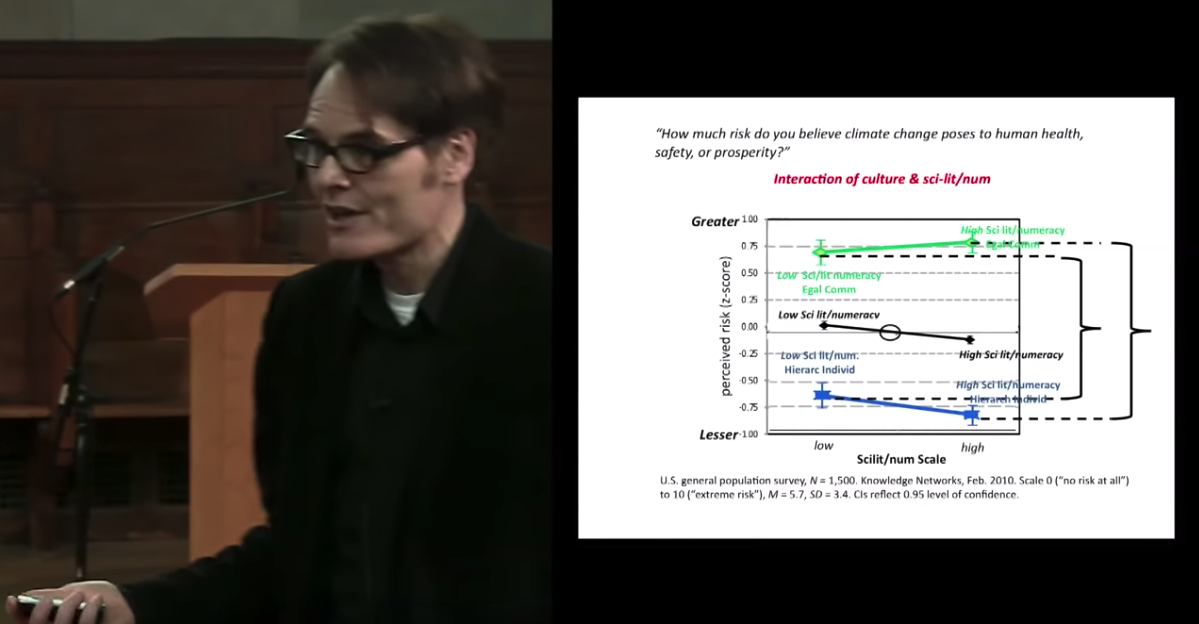

He mentioned in passing some work a colleague of his had done on risk perception. The colleague found that as education and science literacy increase, people's views on climate change polarize rather than converge on the scientific consensus. Greater education doesn't bring people into closer alignment with the consensus, as one might expect. Rather, if the implications of climate change are inconvenient for your political worldview, you are more likely to disagree with the consensus; if those implications are in sync with your political priors, you are more likely to be convinced the consensus is correct. Political orientation predicted people's agreement with the scientific consensus far more accurately than education or scientific literacy did. More educated liberals feel more strongly that climate change is real and important, while more educated conservatives feel more strongly that it is a hoax.

This immediately seemed correct to me, but also deeply destabilizing. On which issues was I out of sync with the best evidence simply because it rubbed me the wrong way, given my cultural and political orientation? How would one go about identifying those instances? And once they were identified, how would one unwind that kind of motivated reasoning, or avoid it going forward?

It's ironic that Pollan was present when I stumbled across this insight: it would greatly hasten my parting ways with much of the mainstream food-movement thinking he represents.

Fluoridation for Portland, OR in 2013: What to Believe

It didn't take long to come to a testing ground. I moved back to my adopted hometown of Portland, Oregon just in time for a ballot initiative vote on whether or not the City of Portland should fluoridate the public water supply.

A friend of mine was very gung ho about the campaign to stop fluoridation and wanted to get me involved. This was one area where I'd had no idea the issue was contentious. I didn't have any political priors for or against public fluoridation. My only Bayesian prior was that it seemed unlikely the CDC was wrong in listing community water fluoridation as one of the ten great public health achievements of the 20th century. Not wrong as in, "It wasn't quite that important; it belongs in the top twenty, not the top ten," but wrong in the sense that public fluoridation wasn't a great breakthrough at all but a grave error. Nevertheless, I wanted to keep an open mind. The scientific consensus, or at least elite conventional wisdom, has been wrong from time to time, sometimes in spectacular ways. Likewise, powerful forces, often operating at the intersection of industry and government, do engage in nefarious behavior that gives the public interest short shrift and is hidden from view. But I didn't want to engage with the issue until I'd found out more about what Esty's Yale colleague was figuring out about science communication and the cognitive psychology of risk perception.

A bit of digging turned up Daniel Kahan as the driving force behind this research. He'd performed a series of experiments testing whether a knowledge deficit, or "public irrationality," was really the reason reasonable lay people end up disagreeing with what scientists see as the best available evidence. Kahan demonstrated fairly convincingly that the divergence was first and foremost a function of motivated reasoning driven by political views, which is exacerbated rather than mitigated by higher levels of education, as measured by numeracy and basic science literacy. (This 20-minute lecture is an excellent introduction to Kahan's thinking.)

(See this article for a more in-depth description of Kahan's experiments.)

Kahan had a lot to say about what drives motivated reasoning and how science communicators might keep the communication environment around a not-yet-polarized issue from becoming polarized and polluted. He didn't have much to say about how individuals can reduce their own impulses toward motivated reasoning. If I was going to dig into the contentious issue of public fluoridation and keep my biases at bay, I would need to come up with a plan on my own. I settled on a variation of Odysseus' strategy for resisting the calamitous temptation of the Sirens' song. Wanting to hear the song but avoid shipwreck on the rocky island of Anthemoessa, Odysseus has his men plug their ears with beeswax so they will not be tempted, while he is lashed to the mast so he can't act on his temptation.

One line of Kahan's experiments looked at how the political and cultural orientation of experts affects our perception of their expertise.

In another experiment Kahan and his coauthors gave out sample biographies of highly accomplished scientists alongside a summary of the results of their research. Then they asked whether the scientist was indeed an expert on the issue. It turned out that people’s actual definition of "expert" is "a credentialed person who agrees with me."

For instance, when the researcher’s results underscored the dangers of climate change, people who tended to worry about climate change were 72 percentage points more likely to agree that the researcher was a bona fide expert. When the same researcher with the same credentials was attached to results that cast doubt on the dangers of global warming, people who tended to dismiss climate change were 54 percentage points more likely to see the researcher as an expert.

Kahan is quick to note that, most of the time, people are perfectly capable of being convinced by the best evidence. There’s a lot of disagreement about climate change and gun control, for instance, but almost none over whether antibiotics work, or whether the H1N1 flu is a problem, or whether heavy drinking impairs people’s ability to drive. Rather, our reasoning becomes rationalizing when we’re dealing with questions where the answers could threaten our tribe — or at least our social standing in our tribe. And in those cases, Kahan says, we’re being perfectly sensible when we fool ourselves.

Many issues are politically polarized in terms of the risk people associate with them.


Lashing Myself to the Mast

I decided I would lash myself to the mast by committing up front to a list of sources I found credible. Rather than letting my bearings drift, subconsciously deciding post hoc whether a source was credible based on how well its story fit my priors, I would stick to my list and venture no further, no matter what I found. This is a version of Alexander's epistemic learned helplessness, but with concrete steps.

As I said, fluoridation was a subject I hadn't realized was contentious until it came up in local politics. It didn't provoke an immediate emotional response in me toward one side or the other, and it was something I knew little about. I had no interest in becoming an armchair pseudo-expert on it, or in learning how to read the technical literature specific to fluoride. I'd already done enough reading of the academic research on nutrition to know that it takes real work and plenty of time to be able to read a body of academic literature critically. When you first jump in, you are flailing around whether you know it or not. I budgeted 8 hours for my research. I wanted to minimize bias and be reasonably confident that I had a 90% chance of reaching a 'correct' opinion.

Applied Epistemic Learned Helplessness

This is what I looked at in descending order of how much weight I assigned to what I found.

1. I did a search on the Cochrane Collaboration database.

2. I did searches of the top scientific and medical journals: Science, Nature, JAMA, The Lancet, and NEJM (just those five).

3. I refused to look at single studies. I confined myself to literature reviews, consensus reports, and meta-analyses.

4. I did searches on the top science magazine sites. National Geographic, Discover, Scientific American, maybe one or two others.

5. I did searches of venerable journalistic enterprises with reputations to protect, trained science writers, skilled editors, and fact-checking departments. My list was something along the lines of the New York Times, the Wall Street Journal, the LA Times, Harper's, The Atlantic, The New Yorker, National Review, and maybe one or two more.

6. Finally, since there was some controversy about corporate malfeasance, and about possible parallels to the way the tobacco industry twisted outcomes from behind the scenes, I also included a few venerable muckraking operations. I was just as conservative here, sticking with outlets I believe do fact-checking and whose reputations would be hurt by getting the story wrong: Mother Jones, The Nation, and In These Times.

The first three required the least art and judgment on my part. As you go down the list, judging the credibility of the sources becomes more subjective, but I was also giving them less weight. Or rather, I would have been, had anything turned up in them; nothing did. There weren't even any articles reporting on a controversy. It was like a hot knife through butter.

On fluoride, at least, it was clear that there was no controversy outside of crankdom. I came away confident that I was voting in line with the best evidence.

Over the course of the campaign, I did end up engaging with the oddball study here and there, as well as detailed critiques of the papers that circulated among anti-fluoride activists. In many cases, I could spot the flaws, but in other cases, they were convincing in the way Scott Alexander describes the back and forth over Immanuel Velikovsky’s work. I simply didn't give them any weight in working out my opinion.

After I'd done my research lashed to the mast, I watched a two-hour-plus debate over fluoridating the Phoenix municipal water supply between Dr. Paul Connett, a toxicologist and author of The Case Against Fluoride, and Dr. Howard Farran, a dentist who'd won awards for his efforts to fluoridate the City of Phoenix's water. To be honest, if that had been the first thing I'd seen, I might have started off anti-fluoride, with all the attendant anchoring effects. Connett, with his stentorian British accent, comes across as more authoritative than Farran, who is arguing for the scientific consensus. Connett also has a better command of the literature: he steps in with helpful reminders and annotations as Farran struggles to recall which specific paper supports the point he is trying to make or refute.

Trouble in Paradise

This approach is not without its problems. We are rarely confronted with an issue in a simple yes-or-no format, and it's not often that we come across a contentious issue without any strong inclination either way as to what we'd prefer to believe.

Reasonable people can disagree about which sources to commit to as highly credible. Some people would choose badly, and if you are already convinced that mainstream sources are fatally flawed, this approach is of little value. Complicating things further, even top scientific journals publish junk science from time to time: it was the august Lancet that published Andrew Wakefield's paper, which became ground zero for contemporary vaccine denial. And on issues that are important to me and deep inside my wheelhouse, it's easy for me to see that a titan of journalism like The New York Times is often just gobsmackingly bad at its job when it comes to covering agriculture.

Epistemic learned helplessness is not a perfect answer to the question of What to Believe in a world where we don't have the time, resources, or mental capacity to become experts on everything we want to hold an opinion on. But done right, it gets the job done much more often than not, and much more often than blundering around out of your depth on Google Scholar.

* I say ironically because, while Taubes was a rigorous and incisive thinker a decade ago when he published Good Calories, Bad Calories (challenging a lot of the flabby, lazy conventional wisdom of the time), today he relies far too much on anecdote and thought experiments to dismiss evidence that challenges the insulin hypothesis he made his reputation bringing into the mainstream debate. His work has begun to take on some unfortunate aspects of The Crank.

The Agromodernist Moment is a project of Food and Farm Discussion Lab. If you'd like to support this column and the other work we do, consider a monthly donation via Patreon or a one-time donation via Paypal.
