Neutrinos are ubiquitous. We are constantly traversed by millions of them, as they come from three independent natural sources in large numbers, traveling to us at very nearly the speed of light. First of all, they are produced in gigantic numbers in the cores of stars, during the fusion processes that release the energy necessary to keep the stars from collapsing onto themselves. We observe neutrinos from our Sun and from other astrophysical sources as they travel to Earth and produce subnuclear scattering reactions in very large detectors buried underground. Second, they are produced when the protons and light nuclei that bombard our atmosphere from the cosmos interact with the nitrogen and oxygen atoms in the upper atmosphere. Third, they come from the interior of our planet, where heavy nuclei undergo radioactive decays.

In addition to the above natural sources, we can produce neutrinos by colliding energetic protons with nuclei in stationary targets: these are called "accelerator neutrinos". And we can produce large numbers of lower-energy neutrinos from nuclear reactors, although in that case we cannot control the direction of the resulting flux. Although solar and atmospheric neutrinos provided the first evidence of the oscillation phenomenon, the two "artificial" sources have become more and more important in neutrino research over the past decades.

The standard picture and beyond

The standard picture of neutrinos in the Standard Model has it that there are three species of these particles, respectively labeled "electron neutrino", "muon neutrino", and "tau neutrino". They have tiny masses, so tiny in fact that it has not been possible to measure them. One big mystery concerns the hierarchy of their masses, as the ordering of their values has huge implications in particle physics and cosmology. But there are other, more nagging issues that keep neutrino physicists puzzled. These have to do with a few "anomalies" that have been observed in several experimental studies.

The anomalies come from different measurements, but they all challenge the standard explanation of neutrino oscillations in terms of the best-fit values of the pairs of parameters that determine each oscillation: a "mixing angle" and a mass difference. A large number of theoretical works have tried, over the past 20 years, to interpret these anomalies as signs of new physics beyond the standard paradigm.
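
For reference, here is the standard textbook two-flavour approximation (it is not spelled out in the article discussed here, and the symbols are the conventional ones rather than anything taken from it). The probability that a neutrino of flavour $\alpha$ and energy $E$ is detected as flavour $\beta$ after travelling a distance $L$ is

$$P(\nu_\alpha \to \nu_\beta) = \sin^2(2\theta)\,\sin^2\!\left(1.27\,\frac{\Delta m^2\,[\mathrm{eV}^2]\;L\,[\mathrm{km}]}{E\,[\mathrm{GeV}]}\right)$$

Here $\theta$ is the mixing angle, which sets the maximum size of the effect, and $\Delta m^2$ is the difference of the squared masses of the two states, which sets the value of $L/E$ at which the oscillation develops. The factor 1.27 absorbs the constants $\hbar$ and $c$ for the units indicated.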

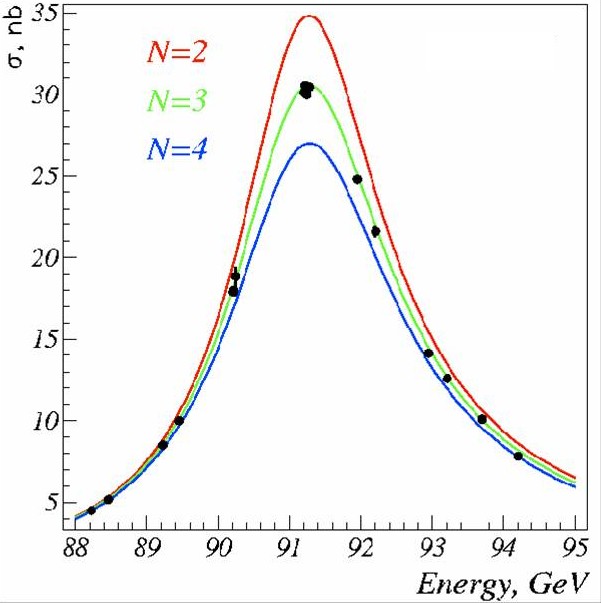

The most notable explanation of the observed anomalies involves the existence of additional neutrino species into which standard neutrinos could oscillate. These "sterile" neutrinos would escape the draconian cut that the detailed measurements of Z boson decays performed by the LEP collider experiments set in the nineties (thou shalt have three light neutrinos), as one could hypothesize that sterile neutrinos do not have any business with Z bosons.

[Below: the lineshape of the Z boson (i.e. its production rate as a function of collision energy), determined in precision electron-positron reactions at LEP, conclusively determines the existence of three, and only three, light neutrinos (i.e. ones with mass lower than half the Z boson mass, MZ/2=45.6 GeV). The three curves show predictions for the production rate in the case of 2, 3, and 4 neutrino species.]

The LSND anomaly

An experiment at Los Alamos created a large number of low-energy muon antineutrinos from the production of positive pions. While in the chain of reactions

$$\pi^+ \to \mu^+ + \nu_\mu, \qquad \mu^+ \to e^+ + \nu_e + \bar{\nu}_\mu$$

muon neutrinos and antineutrinos are produced in equal numbers, if the positive muons are stopped before they decay the resulting antineutrinos populate the energy spectrum between 20 and 60 MeV, where the contamination from their antiparticles (muon neutrinos) can be kept smaller than 1 per mille. LSND could thus search for the appearance of electron antineutrinos in a large tank containing 167 tons of liquid scintillator, where the inverse beta decay reaction

$$\bar{\nu}_e + p \to e^+ + n$$

could take place with very small backgrounds. The reaction could be flagged by finding the prompt signal of the positron (with an energy of up to about 52 MeV) followed by a delayed 2.2 MeV photon from neutron capture. In five years of data taking, from 1993 to 1998, LSND collected a signal of 90 ± 23 electron antineutrino appearance events, a result that was in tension with the null result of a very similar experiment at Karlsruhe (KARMEN), and which was later recognized to be flying in the face of the standard oscillation solution found by other experiments.

In the meantime, a second class of anomalies was found in reactor-based experiments looking at the disappearance of electron neutrinos from the reactor flux, as well as in the measurement of solar neutrinos in gallium experiments. A common interpretation of these results could reside in the existence of a neutrino at a mass scale of 1 eV or above, which could drive the high-mass-difference oscillation solution favoured by LSND and escape the results of other experiments. This neutrino should be sterile, in order not to affect the above-mentioned LEP constraints.

MiniBooNE

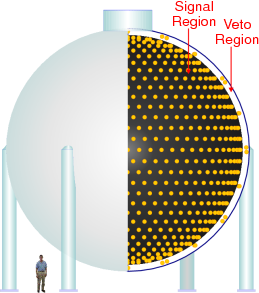

An intense program of studies of accelerator neutrinos is underway at Fermilab. In particular, the MiniBooNE experiment receives neutrinos from the Booster Neutrino Beam (BNB), created when 8-GeV protons interact with a beryllium target. The geometry of magnets downstream of the target can select almost pure beams of muon neutrinos or antineutrinos, which later impinge on MiniBooNE, a 40-foot diameter sphere filled with 818 tons of mineral oil carefully watched by 1520 8" photomultiplier tubes.

(Below, a schematic of the MiniBooNE detector. Each yellow dot is a photomultiplier tube watching the liquid volume. An external layer "vetoes" events caused by charged particles entering the vessel.)

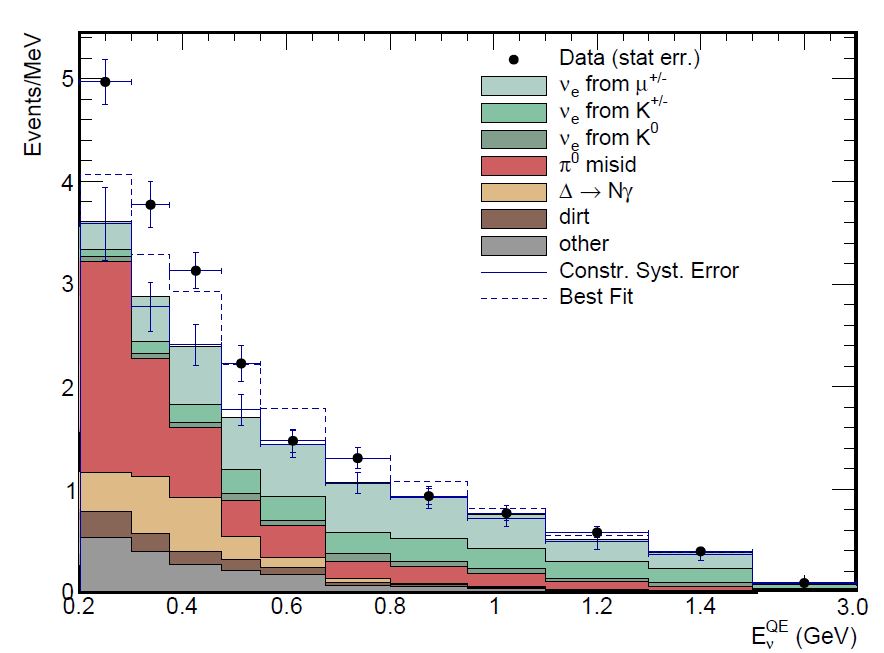

MiniBooNE has received a large flux of muon neutrinos and antineutrinos from the BNB: 12 × 10^20 "protons on target" were delivered by the facility. The analysis of these data can be summarized in the graph below, which shows the energy distribution of the candidate electron neutrino appearance events, compared to predicted backgrounds. A significant excess, quantified at the level of 4.8 standard deviations from the background-only hypothesis, is evident at low energy.

The observed excess is in agreement with the LSND result, and provides a good fit to a "large delta-m" solution in a two-neutrino oscillation framework. The article combines the two results to quote a global significance exceeding six standard deviations - in particle physics terms, the equivalent of "ample observation-level significance".

I think it is beyond question that the result is systematic in nature: it is either due to the systematic effect from the presence of a true new signal, or to a systematic effect of another nature connected to the estimate of known or unknown backgrounds. The question is therefore how much one can trust the experimental understanding of the latter. In particular, the background due to neutral-current production of neutral pions seems a source worth discussing in excruciating detail, as its predicted energy shape is very similar to that of the excess: bump it up by 50%-60% and the anomaly is gone.

In the article, little space is devoted to the crucial discussion of neutral current backgrounds: they write that

The gamma background from neutral-current (NC) pizero production and Delta -> N gamma radiative decay [29] are constrained by the associated large two-gamma sample (mainly from Delta production) observed in the MiniBooNE data, where the new pizero data agrees well with the published data [30].

This paragraph IMHO raises more questions than it answers... But I believe this article is too long already to delve into these technical details - I am not a true expert in these matters, so my comments would probably be misleading or plain fallacious. But I remain sceptical of the interpretation of this experimental result in terms of new physics such as sterile neutrinos or a second oscillation solution at large delta-m. Why?

In my courses of Statistics for data analysis I explain to my students that it is rare to mis-evaluate statistical uncertainties in a way that makes new signals arise from nothing. For those, a type-I error rate of 3x10^-7 (which corresponds to a 5-sigma significance for a one-tailed test) is a safe threshold. But when the veracity of a result is dominated by the knowledge of systematic uncertainties, things become quite different.

Remember: N-sigmas are a shortcut

The example I make to my students is the following. Imagine you have a 5-sigma effect and your measurement uncertainties are dominated by a single, large source of systematic uncertainty. Imagine that you have underestimated the systematic effect by a factor of two. Your 5-sigmas end up being only 2.5 sigma in reality. I can almost see you stretching your arms out and hear you arguing, upon the discovery of the mistake: "Well, I mistook that effect by a factor of two - ok, so, do you want to kill me?"

Yes I do! Yes I do, as you went around speaking of a five-sigma effect, when you had in fact only 2.5. It is not a mistake of a factor of two: in p-value terms, 2.5 sigma correspond to a p-value of 0.006, and not of 0.0000003 as five sigma would imply - so in terms of p-value, which is what directly measures the frequency with which one gets data as surprising or more, given the null hypothesis, you erred by a factor of 20,000! Not two!!! If you are my Ph.D. student, I claim I have the right to kill you using some horribly painful method.

Remember, "N sigma" are a shortcut for a p-value which would be cumbersome to quote when it has many decimals. We say "5 sigma" because it is quicker than "zero comma zero zero zero zero zero zero three probability", but the two things are in a one-to-one mathematical map - a one-tailed p-value of 0.00135 for instance corresponds to three sigma, and so on.
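
The map between "N sigma" and p-values can be checked with a few lines of Python. This is only a minimal sketch, assuming the one-tailed Gaussian convention used above; the function names `p_from_sigma` and `sigma_from_p` are mine, chosen for illustration, and only the standard library is used.

```python
import math
from statistics import NormalDist


def p_from_sigma(n_sigma: float) -> float:
    """One-tailed Gaussian tail probability beyond n_sigma standard deviations."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))


def sigma_from_p(p_value: float) -> float:
    """Inverse map: the number of sigma whose one-tailed tail probability is p_value."""
    # By symmetry of the Gaussian, the upper-tail quantile is minus the lower-tail one.
    return -NormalDist().inv_cdf(p_value)


if __name__ == "__main__":
    # Print the p-values for the significances discussed in the text.
    for n in (2.5, 3.0, 5.0):
        print(f"{n} sigma -> p = {p_from_sigma(n):.3g}")

    # How much bigger is the p-value if 5 sigma is really 2.5 sigma?
    ratio = p_from_sigma(2.5) / p_from_sigma(5.0)
    print(f"p(2.5 sigma) / p(5 sigma) = {ratio:.0f}")
```

Running it shows that halving the number of sigma from 5 to 2.5 inflates the p-value by a factor of about twenty thousand, which is the point of the example above.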

As for MiniBooNE, I hope they will release more detail on their background estimates and related techniques. Simply collecting more data and making the result stronger from a statistical standpoint does not, in these cases, help convince your peers: as I said, this is systematic in nature, so the problem has to be attacked by other means.

A good example of a similar situation is the long-standing "DAMA-LIBRA" signal attributed to light dark matter, found by that experiment in nuclear recoils in sodium iodide crystals at the underground Gran Sasso laboratory in central Italy. The collaboration has shown 5-sigma, then 7-sigma, and now 9-sigma effects - as if we could count sigmas that way. Above 5 sigma, in my opinion, it makes no sense to speak of these numbers. For we would be saying we control the tails of our systematic uncertainties with precisions that are utterly unbelievable... Whether DAMA's recoils are dark matter related remains another mystery. I hope I'll live to see the solution of these riddles.

----

Tommaso Dorigo is an experimental particle physicist who works for the INFN at the University of Padova, and collaborates with the CMS experiment at the CERN LHC. He coordinates the European network AMVA4NewPhysics as well as research in accelerator-based physics for INFN-Padova, and is an editor of the journal Reviews in Physics. In 2016 Dorigo published the book “Anomaly! Collider physics and the quest for new phenomena at Fermilab”. You can get a copy of the book on Amazon.
