I'm not talking about romantic dates with seniors, though. I'm afraid I don't have many tips there, although good personal hygiene and being a good listener will probably count in your favour.

I'm talking mainly about the dating of rocks. This isn't as romantic as the other kind of dating, but with both kinds there's a chance you'll get your rocks off. At first, geologists didn't really have much to go on when it came to dating rocks, other than direct visual observation of the rocks themselves. Previously, it was widely thought that rocks had simply "always been there", since the beginning of time.

The early geologists noted that the content of rocks was, most of the time, different as you dug deeper down. They also saw that new rock was typically laid down on top of existing rock (e.g. when volcanic lava spews out over existing rock and then solidifies on top of it, or when sediments are deposited on riverbeds). This gave them the general idea that "deeper" rocks must usually (but not always) be older than rocks nearer the surface. This concept was later formalised by Nicolas Steno in the 17th century as "The Law of Superposition".

Through patient observation and measurement, they determined that there were distinct "layers" of rock, and that the same pattern of layers was present in many places around the world, allowing for a little regional variation. This was the basis of lithologic stratigraphy: the analysis of rock types, their positioning within layered strata, and how they came to be deposited in such a way. The geologists began to recognise that the rock strata represented successive time periods. By looking at the position of rocks within the stratigraphic sequence, comparing their physical characteristics (colour, hardness, proportions of various minerals, crystal formations etc.) and noting the types of fossils they contained, they were able to compare the ages of rocks from one place with rocks from another, and get a good idea of the ages of rocks relative to one another, wherever in the world they were found.

This also allowed them to estimate the relative age of rocks that had been knocked out of the conventional layered pattern (perhaps as a result of an earthquake, or by erosion and breaking off), by matching their characteristics (colour, hardness, mineral content etc.) against nearby rock formations and "piecing together" the history.

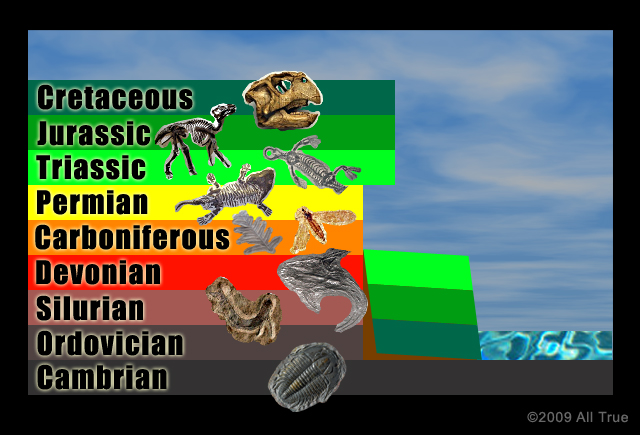

Based on all these findings, the beginnings of a Geologic Time Scale started to emerge, and time periods were given names. Some of them are shown in the diagram above. While the position of a rock or fossil on the time scale (and thus its relative age compared to rocks and fossils from the other periods) could be confidently determined, the actual absolute ages could not.

The geologists could now sort rocks and fossils into date order, but they had no reliable way of knowing the actual age of any particular rock or fossil!

They could tell you that "Specimen A is older than B, and B is older than C" but they couldn't put an actual date on any of them, at least not with anything like reliable accuracy.

In the diagram above, they'd have been able to work out that the block on the right wasn't the same age as the rock immediately next to it. By looking at its qualities (mineral content, hardness etc) and the fossils it contained, they could confidently deduce that it contained rock deposited in the Cretaceous, Jurassic and Triassic periods and so was much younger than the Devonian, Silurian and Ordovician rock beside it. They could also deduce, by the ordering of the layers, that it had flipped upside down as it fell.

They could, perhaps, have made a fairly confident bet that water had eroded the older rocks at the base of the cliff, leaving an overhang of Cretaceous, Jurassic and Triassic rock that then snapped, and turned upside down as it fell to the ground. They could also have deduced that the water level must have been higher in the past.

But despite all that detective work giving them the history of events that led to the scene depicted in the diagram, they couldn't say with any accuracy how many years ago any of the specific events occurred.

The reason for this was that the only ways they could estimate the absolute time-scales involved were all based on very variable phenomena: erosion, weathering, sedimentation and lithification. For example, they could measure the effects of erosion on contemporary rocks and combine that with contemporary rates of sedimentation and lithification to estimate the age of much older rocks. But to do that they had no choice but to assume a steady rate of erosion over time, and that assumption wasn't supported by the evidence. Even measurements of contemporary rocks showed that erosion did not always occur at a steady rate, and the same variability turned up when they measured the other phenomena as well.
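To see why a variable rate is such a problem, here's a minimal Python sketch. The sediment thickness and the range of deposition rates are made-up illustrative numbers, not real measurements, and `age_range_from_rate` is just a hypothetical helper name; the point is only that an uncertain rate turns directly into an uncertain age.

```python
def age_range_from_rate(thickness_m, slowest_mm_per_year, fastest_mm_per_year):
    """Age range (in years) for a layer of sediment, given a range of possible
    steady deposition rates. Illustrative numbers only."""
    thickness_mm = thickness_m * 1000
    youngest = thickness_mm / fastest_mm_per_year  # fast deposition -> less time needed
    oldest = thickness_mm / slowest_mm_per_year    # slow deposition -> more time needed
    return youngest, oldest


# Hypothetical: 1,000 m of sediment laid down at somewhere between 0.1 and 1 mm per year
youngest, oldest = age_range_from_rate(1000, 0.1, 1.0)
print(f"between {youngest:,.0f} and {oldest:,.0f} years")
```

A tenfold uncertainty in the rate gives a tenfold uncertainty in the age. And if you can only put an upper limit on the rate, the calculation only gives you a minimum age.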

Using the methods available at the time, including early and still rather imprecise measurements of radioactive decay, Arthur Holmes, in his 1913 book "The Age Of The Earth", estimated Earth to be “at least 1.6 billion years old”. He was right, but the uncertainties were so large that the best estimate he could arrive at was just a minimum age.

To get a good estimate of the actual age, you need to establish not just a minimum age but also a maximum, and these need to be reasonably close together. Let's look at an example on a more human time-scale. If you only know someone's age as being "at least 5", that doesn't tell you a great deal about them. It lets us rule out a few possible ages, but they could be a six-year-old, or they could be ninety-six. If you know someone's age as being "between 5 and 55", that's a little better: we have an upper and a lower boundary, so we know with confidence that their age falls in a certain range. However, this still leaves a wide range of possible ages, the largest being 11 times bigger than the smallest!

To get a useful understanding of something's age, the upper and lower bounds need to be reasonably close together, so that the window of possible ages isn't too wide to be useful.

But how can we establish an accurate age-window for something that could be millions, or even billions, of years old?

That is the tricky part, and for a long while we simply had no way to date rocks with any real accuracy, although geologists could confidently state most rocks had to be extremely old – thousands or millions of years old at the very least.

What was needed was a reliable “clock”, a clock that was started when the rock formed and which continued to “tick” steadily from that time all the way to the present. That sounds like a tall order, but eventually it was discovered that natural processes had created exactly the kind of clock we needed to reliably date ancient rocks.

The “clock” is actually the decay of radioactive isotopes within the rock. Experimentation has shown us that radioactive decay occurs at predictable rates, which remain steady over the entire process of decay, and aren't changed by environmental factors. There are a few exceptions to this with certain isotopes, but those aren't the ones used for radiometric dating and in any case the changes are only in the region of 1-2%.

Each particular radioactive isotope has its own specific “half-life”, which is the length of time it takes for half of the remaining radioactive isotope to have decayed into the decay product. So if you had 1,000 units of the radioactive isotope and the half-life was 10 minutes, then you'd only have 500 units of it left 10 minutes later (and 500 units of the decay product*). A further 10 minutes later you'd only have 250 units of it left (and 750 units of the decay product*), and so on.

*If the decay product is also radioactive, it will begin to decay into its own decay product as well. That still happens at predictable rates, so the dating can still be done; it just becomes more complicated to calculate, as you need to take into account the ratios of all the decay products down the chain.

Obviously, something with a half-life of 10 minutes isn't going to be much use when it comes to dating rocks that are thousands, millions or billions of years old. The quantity of the original radioactive isotope will have been halving every 10 minutes that whole time, so the amount left would be too tiny to measure, even if there were hundreds of tons of it when the rock formed. Just think: if you were given $1,000,000 but half of it was taken away every 10 minutes, after just 4 hours you'd have only 6 cents left!
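The halving described above is easy to check. This is a minimal Python sketch, assuming the decay product is stable (so every unit that decays becomes one unit of decay product); `remaining` is just an illustrative helper name.

```python
def remaining(initial, half_life, elapsed):
    """Amount of the original isotope left after `elapsed` time.
    `half_life` and `elapsed` must be in the same units."""
    return initial * 0.5 ** (elapsed / half_life)


# 1,000 units with a 10-minute half-life, assuming a stable decay product
for minutes in (0, 10, 20, 30):
    parent = remaining(1000, 10, minutes)
    print(f"{minutes:>2} min: {parent:6.1f} original, {1000 - parent:6.1f} decay product")

# $1,000,000 halved every 10 minutes for 4 hours (240 minutes, i.e. 24 halvings)
print(f"${remaining(1_000_000, 10, 240):.4f}")  # about six cents
```

Each extra half-life multiplies what's left by another 0.5, which is why a short half-life wipes out the original isotope so quickly.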

Fortunately there are lots of different radioactive isotopes present in rocks, and they each have different half-lives, so we can pick whichever of those present gives us the most accurate result (they will all give compatible results, but some will have such large margins of error as to be virtually useless). To get an accurate measurement, the half-life has to be such that there will still be enough of the original radioactive isotope left (i.e. not yet decayed) for us to measure accurately, and enough of the decay product produced that we can measure its amount accurately too. If there isn't a sufficient quantity of both the original isotope AND the decay product for us to measure accurately, we know we need to try again with a different isotope.

The more of the materials there are, the less significant the margin of error inherent in our measurement devices becomes. If you can measure to the nearest 10 particles, for example, but your sample contains 44 particles, you would measure the number as 40, an error of about 9%. But if you had 4,004 particles, you'd measure the number as 4,000, an error of only about 0.1%. The more accurately we can measure the amount of the original isotope remaining and the amount of the decay product present, the more accurately we can measure the ratio between them.

Once we know the ratio of original isotope to decay product, we can calculate the age by working out how many “half-lives” have occurred (it won't always be a whole number) and multiplying this by the length of the half-life. The actual equation used is

$$D = D_0 + N\left(e^{\lambda t} - 1\right)$$

where $D$ is the amount of decay product now, $D_0$ is the amount of decay product present when the rock formed, $N$ is the amount of the original isotope remaining now, $\lambda$ is the decay constant (equal to $\ln 2$ divided by the half-life) and $t$ is the age. I won't go into the mathematics here, because logarithms hurt my brain.

Returning to the simplified example where we started with 1,000 units of the original isotope and a half-life of 10 minutes: if we measured our sample and found it had 125 units of the original isotope and 875 units of the decay product, we'd be able to calculate that it had halved 3 times, so three half-lives must have passed since it was formed, which would be 30 minutes. (In real-world examples it will almost never turn out to be an exact whole-number multiple of the half-life like this.)
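For readers who don't mind logarithms, here's a minimal Python sketch of the same calculation. Rearranging $D = D_0 + N(e^{\lambda t} - 1)$ for $t$ gives $t = \frac{1}{\lambda}\ln\left(1 + \frac{D - D_0}{N}\right)$, with $\lambda = \ln 2 / t_{1/2}$. The function name is just for illustration, and it assumes you already know $D_0$ (the simplified example assumes there was no decay product at the start).

```python
import math


def age_from_ratio(parent_now, daughter_now, half_life, daughter_initial=0.0):
    """Solve D = D0 + N(e^(lambda*t) - 1) for t.
    The result is in the same time units as `half_life`."""
    decay_constant = math.log(2) / half_life  # lambda
    return math.log(1 + (daughter_now - daughter_initial) / parent_now) / decay_constant


# The simplified example: 125 units left, 875 units of decay product, 10-minute half-life
print(round(age_from_ratio(125, 875, 10), 6))  # 30 minutes, i.e. exactly 3 half-lives

# A case that isn't a whole number of half-lives
print(round(age_from_ratio(200, 800, 10), 1))  # about 23.2 minutes (roughly 2.32 half-lives)
```

The number of half-lives is just $\log_2(1 + D/N)$ when $D_0$ is zero, which is where the "halved 3 times" in the example comes from: $1 + 875/125 = 8 = 2^3$.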

The radioactive decay is the predictable, constant “tick” of the clock, but for us to be able to use that clock it needs to have been “zeroed” at the point the rock formed. Luckily that is exactly what happens. But how?

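For each method of radiometric dating (two of the common ones for rocks are potassium-argon and uranium-lead; in each, the first element decays into the second) there is a specific closure temperature for each type of mineral the isotopes are found in. Above that temperature, the decay product isn't locked in place: it can diffuse out of the crystal (argon, for example, is a gas and simply escapes), so no decay product builds up and the clock stays at zero. Once the rock cools below the closure temperature, the decay product is trapped where it forms, and from that moment the ratio of original isotope to decay product starts recording the time that has passed.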
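Here is a toy Python model of that "zeroing", under loudly simplified assumptions: the mineral cools at a steady rate, the closure temperature is treated as a sharp cut-off (in reality closure happens over a range of temperatures), and every number (starting temperature, cooling rate, closure temperature, and a half-life of 1,250 million years, loosely modelled on potassium-40) is hypothetical. The original isotope decays the whole time, but any decay product formed while the mineral is still hotter than the closure temperature is thrown away.

```python
import math


def toy_cooling_history(steps=100, dt=1.0, start_temp=1000.0, cooling_per_step=10.0,
                        closure_temp=500.0, half_life=1250.0):
    """Toy model: returns (time since the rock formed, age read from the clock).
    All parameters are hypothetical; times are in millions of years."""
    decay_constant = math.log(2) / half_life
    survive = math.exp(-decay_constant * dt)  # fraction of parent left after one step
    parent, daughter = 1_000_000.0, 0.0
    for step in range(steps):
        decayed = parent * (1 - survive)
        parent *= survive
        daughter += decayed
        temperature = start_temp - cooling_per_step * (step + 1)
        if temperature >= closure_temp:
            daughter = 0.0  # still too hot: the decay product escapes, clock stays at zero
    # Read the clock with the standard age equation, assuming no decay product at closure
    clock_age = math.log(1 + daughter / parent) / decay_constant
    return steps * dt, clock_age


elapsed, clock_age = toy_cooling_history()
print(f"rock formed {elapsed:.0f} Myr ago, clock reads {clock_age:.1f} Myr")
```

In this made-up cooling history the rock formed 100 million years ago but only dropped below the closure temperature 50 million years later, so the clock reads 50: it measures the time since the decay product started being trapped.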

But maybe the rates of radioactive decay have changed – maybe they used to be a lot faster, which would make the rocks a lot younger than science says they are?

For one thing, the date estimates we get from radiometric dating are compatible with the date estimates from other, independent dating methods (such as dendrochronology, dating based on tree rings). This means that if we wanted to contend that rates of radioactive decay had changed, we'd also have to assume that the unrelated factors used in the other dating methods (such as the formation of tree rings) had also changed, and to the same degree; otherwise the different dating methods would not all produce the compatible estimates that they do. We'd have a very large number of coincidences to explain!

If physics had been so different in the past, we'd see evidence of this from astronomy, as looking into deep space is, quite literally, looking into the past. This is due to the time it takes for light from distant objects to reach us: when we look at an object 1,000 light years away, we are seeing it as it was 1,000 years ago! Astronomers have found no evidence of such drastic changes to physical laws. Things look as they'd be expected to look if the physical laws had remained constant (at least over the time-scales involved with the various layers of rock).

As we get more and more pieces of the puzzle, we start to find that they fit together, but only in certain combinations.

When there is only one way they can all fit together (e.g. when several independent dating methods all point to the same approximate age), we can say with a good degree of confidence that we've put them together correctly, and we can be confident in what we see. When we find certain pieces can't fit with certain others, we can deduce that at least one of them isn't right: it isn't a piece of the jigsaw we're putting together (which is the jigsaw that shows how the world works). When we look at the various forms of radiometric dating, we find they all give compatible results (that is, the age windows each form gives will overlap). When we compare radiometric dating with other, independent forms of dating, we find the results each gives are compatible with each other. When we have several independent methods all homing in on the same age (albeit each one with a different margin of error), we can be confident that age is a reliable estimate.
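The "overlapping age windows" idea can be made concrete with a few lines of Python. This is a minimal sketch; `common_window` is a hypothetical helper, and the windows below are invented numbers for illustration, not results from any real sample.

```python
def common_window(windows):
    """Given (lowest, highest) age windows from several methods, return the
    range they all share, or None if at least one window doesn't overlap."""
    lowest = max(low for low, _ in windows)
    highest = min(high for _, high in windows)
    return (lowest, highest) if lowest <= highest else None


# Hypothetical age windows (millions of years) from three independent methods
print(common_window([(140, 160), (148, 152), (145, 170)]))  # (148, 152)
# A piece that doesn't fit: no age is compatible with both windows
print(common_window([(140, 160), (170, 180)]))  # None
```

The shared window can be no wider than the narrowest individual window, and an empty result is the signal that at least one piece doesn't belong in the jigsaw.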
