This is the extended abstract of an article you cannot read anywhere, which ensures that one can later claim “nobody could have possibly foreseen as the relevant literature clearly shows …” (more on why here). The article clarifies the singularity concept’s terminology and criticizes the concept itself, finishing with global suicide as the only expected anomaly:

Singularity: Nothing Unusual Except For Global Suicide

This article aims to present a rational rather than wishful perspective on the so-called 'singularity hypothesis'. In the face of imminent super-human rationality on top of mere super-human intelligence, we avoid arguments based on human irrationality; for example, we avoid thinking in terms of "existential risks".

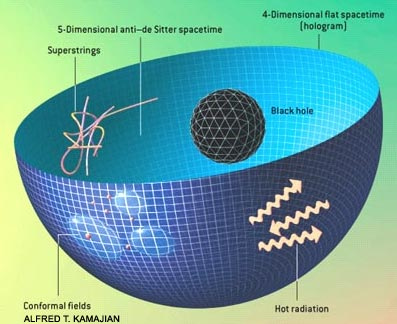

We start with a brief clarification of terminology: There are two meanings of “singularity” in the context of transhumanism. Both meanings closely fit the mathematical-physics analogy from which the term derives, namely the black hole as described by classical general relativity. Ironically, even the confusion around the term reflects the historical confusion between the singularity inside a black hole and the event horizon around it.

With the “infinite singularity”, as with the curvature singularity in general relativity, something well defined and observable, like computing speed, becomes infinite. Artificial infinities, say due to re-defining parameters so that they go through a division by zero, do not count as a truly infinite singularity.

The “horizon singularity”, on the other hand, envisions a certain threshold, a point of no return after which, for example, any further development is somehow fundamentally more unpredictable than the future usually is. Again, the analogy with what happens at physical horizons (which were discovered as coordinate singularities) fits: What falls in cannot come back out, and there is a further, profound form of uncertainty between observers that are separated by a horizon (see ‘black hole complementarity’). Here too, infinities appear, but only because of unsuitable coordinates.
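The distinction between the two kinds of singularity can be made concrete with the standard textbook example of the Schwarzschild black hole. The following is only an illustrative sketch, not part of the article's own argument; the symbols $r_s$ (Schwarzschild radius), $M$ (mass) and $K$ (Kretschmann curvature scalar) are introduced here for illustration.

```latex
% Schwarzschild metric in the usual (t, r, \theta, \phi) coordinates
ds^2 = -\left(1 - \frac{r_s}{r}\right) c^2\, dt^2
       + \left(1 - \frac{r_s}{r}\right)^{-1} dr^2
       + r^2\, d\Omega^2,
\qquad r_s = \frac{2GM}{c^2}

% The radial metric component diverges at the horizon r = r_s,
% but this is a division by zero caused by the choice of coordinates.
% A coordinate-independent measure of curvature stays finite there:
K = R_{\mu\nu\rho\sigma} R^{\mu\nu\rho\sigma} = \frac{12\, r_s^2}{r^6},
\qquad K(r_s) = \frac{12}{r_s^4} < \infty

% Only at the center does something observable truly become infinite:
K \to \infty \quad \text{as} \quad r \to 0
```

In this picture, $r = r_s$ plays the role of the “horizon singularity” (a point of no return whose infinity disappears in better coordinates), while $r = 0$ plays the role of the “infinite singularity”.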

In conclusion, the term “singularity” matches the issues that have recently gained popularity under that label quite well. In fact, it even serves to distinguish them via the infinite-versus-horizon distinction.

The main contribution is divided into three parts:

1) A criticism of both “singularity” concepts as descriptions of future evolutionary events: The “infinite singularity” is to be rejected in much the same way as modern physics rejected it: singularities are rejected not because they can be disproved but because they are operationally verification-transcendent (bad science). We also reject the “horizon singularity”. Artificial intelligence (AI) is possible (humans are nature's nanotech robots with AI) and much less restricted than biologically evolved intelligence. And yes, evolution goes through periods of exponential growth via self-referential, recursive ‘evolution of evolution’ type processes. This very insight, that information technology evolves according to what is known from evolution generally, is usually taken as an argument in favor of the singularity. However, AI, just like anything else, indeed “evolves according to what is known from evolution generally”, where the co-evolution of the environment is especially important, and one should therefore precisely not expect any magic new form of unpredictability or extremely unusual behavior, at least not on these grounds.

We reject the argument by Kurzweil and others, including Chalmers, who declare some singularity inevitable based on far-extrapolated growth of ill-defined measures without taking the co-evolving environment into account. Similar arguments could equally apply to robots building robots better than humans do, which they have already been doing for many years now. They evolve along with the rest of the total environment. So will intelligence, and there is incidentally nothing that we can do about this but philosophize.

In summary: We start with a criticism of the singularity concept as such: how it is supposed to come about, and whether it is a useful concept applicable to future development as it can reasonably be expected to occur, given what we know about the general evolution of systems in co-evolving environments.

2) We will further argue that although technological advances will go beyond our imagination, the main hope of transhumanists, namely a kind of heaven on earth in the computer and the uploading of persons into virtual reality, involves problems that have not yet been considered. We discuss: A) A general criticism of obscure expectations of ‘higher consciousness’. Minsky’s “Society of Mind” and D. Dennett’s descriptions will help us here. B) Nick Bostrom’s 'simulation hypothesis' and similar arguments stand or fall with the assumed statistical ensembles, which are ill-defined and amount to counting souls in a naive realistic misinterpretation of the multiverse/many-worlds interpretation. C) Brain emulation and gradual uploading are perfectly possible, but desperately maximizing one's presence in an ill-defined statistical ensemble that is in any case given in its modal totality is not what advanced individuals are going to be interested in.

The holographic universe: We are a ‘simulation’ anyway!

The main conclusion of this section will be that transhumanist dreams are rooted in remnant religiousness (hope of salvation) that is expected to be absent from intellectually advanced systems. Systems with advanced rationality, be they enhanced humanoids or cyberspace-internal structures, understand the issues discussed here sufficiently to no longer have any motivation for continuing some individualist, soul-like personality afraid of death. They will not be hung up on personal identity philosophy. This conclusion leads to the last section.

3) A singularity signifies something unusual in the context of usual, general evolution. Usual Darwinian/neo-Lamarckian processes have, for example, no sharp lines separating species. A singularity implies that evolution behaves in a substrate-dependent way; it would require that memes behave differently from genes in a fundamental way. There may be something like that, but it has escaped serious consideration because of human fears.

Evolution may have an inherent endpoint that occurs much sooner than expected from astrophysical constraints, which would explain the Fermi paradox of there being no traces of advanced alien civilizations. This endpoint is indeed brought on by strong intelligence, but by transhuman, rational intelligence. Supposing utilitarian decisions that aim to minimize suffering while maximizing stability, a rational switch-off (‘Global Suicide’) may be the only and natural option for an advanced, sovereign global structure with sufficient rationality to avoid death by catastrophe.
