Co-design

First of all, what is co-design, and why should all experimental scientists keep that idea at the center of their field of view when they think about new experiments (which, alas, at present they do not)? Co-design is the simultaneous consideration of all aspects of the functioning of an apparatus when defining its working parameters, geometry, layout, building materials, and related technology. Neglecting any part of the system at the design stage is liable to result in a misalignment with the other parts once the system is put in operation. In this sense, co-design is a necessary condition for the optimality of an instrument.

For example, suppose you are building a digital thermometer. You take extreme care to devise a sensor that can capture very small temperature variations with an accuracy of 0.1 degrees, and then you couple it to an electronic readout that digitizes the temperature in 0.5-degree intervals. You have clearly failed: the thermometer will return readings with 0.5-degree granularity, throwing away the higher performance that a finer digitization could have delivered. You failed to consider the whole task together, and the result is a strongly suboptimal system.
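The thermometer argument can be checked with a toy Monte Carlo. This is only an illustration under stated assumptions: the sensor error is modeled as Gaussian with a 0.1-degree standard deviation, the digitizer simply rounds to the nearest multiple of its step, and the names `digitize`, `rms_error` and `expected_rms` are hypothetical. With a fine 0.05-degree step the total error stays close to the sensor's own 0.1 degrees; with a 0.5-degree step, the uniform quantization error (standard deviation step/sqrt(12)) adds in quadrature and dominates.

```python
import math
import random

SENSOR_SIGMA = 0.1  # assumed Gaussian sensor resolution (degrees)


def digitize(value, step):
    """Round a reading to the nearest multiple of the digitizer step."""
    return step * round(value / step)


def rms_error(step, n=100_000, seed=1):
    """Monte Carlo RMS of (digitized reading - true temperature)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        true_t = rng.uniform(15.0, 25.0)
        reading = true_t + rng.gauss(0.0, SENSOR_SIGMA)
        total += (digitize(reading, step) - true_t) ** 2
    return math.sqrt(total / n)


def expected_rms(step):
    """Sensor noise and uniform quantization noise (step^2 / 12) add in quadrature."""
    return math.sqrt(SENSOR_SIGMA**2 + step**2 / 12)


for step in (0.05, 0.5):
    print(f"step={step:.2f}  simulated={rms_error(step):.3f}  "
          f"expected={expected_rms(step):.3f}")
```

Even a perfect sensor could not push the RMS error below 0.5/sqrt(12), about 0.14 degrees, with that readout: the weakest link of the chain sets the performance.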

The above is a pretty dumb example, but maybe it is at the right level of complexity for the audience I wish to target (not you, but rather the community of my peers), as it is surprisingly hard to convince some of my colleagues of the importance of holistic optimization in the design of the experiments we build for our fundamental science investigations. I do hope nobody takes offence at this rather abrasive statement, but this is my perception after trying to push this topic against a mainstream of denial.

Embracing the deep learning revolution: work in progress

The reason for the above is that, over the past few decades, experimental physicists have specialized into two almost disjoint subclasses: those who have become experts in data analysis and in extracting results from our instruments, and those who have become masters of the subtleties of designing and building the awesome particle detectors and other precise scientific instruments we use in our research. When the deep learning revolution happened, 12 years ago, only the former sub-community jumped on the train. The majority of the detector-building community was much less interested in joining the crowd of machine learning enthusiasts, as machine learning was at that time thought to be completely irrelevant to the design of an excellent instrument (and in a sense, at the very beginning, it probably was).

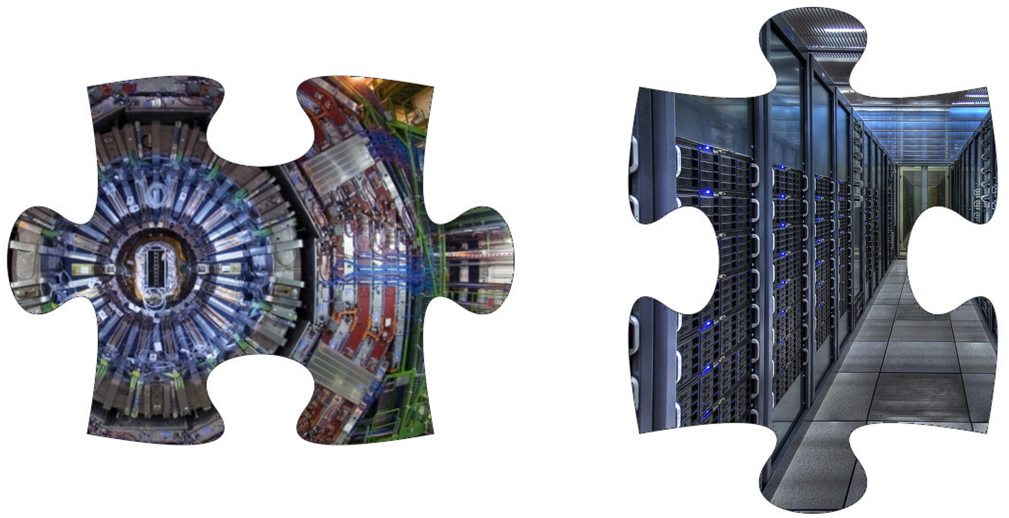

The problem is that, well, those times are gone. Deep learning today is powerful enough to have become an elephant in the room: its potential use in the business of experiment design is impossible to ignore. Our detectors are among the most complex instruments humanity has ever built (think, e.g., of the ATLAS and CMS experiments at the CERN LHC), but their sheer scale should not intimidate us any more.

Yes, the expert-driven design choices that were required to build CMS number in the tens of thousands, and most of them were great, experience-driven decisions. But humans cannot think in 10,000 dimensions, and this means that those choices are not optimal. They seek redundancy of the apparatus (elementary particle interactions are ephemeral, and we cannot go back and measure them a second time if we screw up the first time), but redundancy is the exact opposite of optimality. They favor symmetrical layouts that are easy to build and understand (e.g. in sampling calorimeters), but the physics of hadron showers in a calorimeter is not symmetric. They rely on well-tested paradigms for particle measurement (first track charged particles in a lightweight volume, where preserving their trajectories without perturbing them in dense materials is paramount, and only later destroy both charged and neutral ones in a dense calorimeter), but these paradigms clearly leave room for enormous improvements: a dichotomous zero-density / high-density detector is obviously sub-optimal, as it is a singular point in a rich parameter space of measurement solutions.

If we look outside our tiny garden of fundamental science research, what do we see? Well, GPT-4 and similar large language models are today trained by simultaneously optimizing some 10^12 parameters. Why are we hesitant to try our hand at 10^4? As I said, it is a combination of the conservatism of the physics community and of a lack of training in, and/or interest for, deep learning in the community that takes decisions on the building of apparatus. But things will change. Physicists (even those of the detector-builder kind) are not dogmatic: they have this elasticity in their DNA, as any good scientist should, and they will recognize and embrace positive, groundbreaking, revolutionary improvements when they see them. It takes time to produce excellent examples of how co-design should be carried out, though.

The fact is that studying the optimality of a particle detector, or of an instrument of similar complexity, is a task of very high complexity. Before we can start training our optimization algorithm, we need to be able to simulate all parts of the problem in a way that is amenable to optimization techniques such as gradient descent, the engine under the hood of searches for extrema of a utility function in high-dimensional spaces: that is task number one. On that topic (creating suitable surrogates of the non-differentiable parts of the data-generating mechanisms, as well as of the software reconstruction of the observable results of the physics reactions under study) I have written elsewhere, and I will leave it aside here. Task number two is then agreeing on what the objective of our optimization is.
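To make "amenable to gradient descent" concrete, here is a deliberately tiny sketch. Everything in it is an assumption for illustration: `surrogate_utility` is a made-up smooth function of two hypothetical design parameters `t` and `u`, each trading a resolution penalty against a cost penalty, with a coupling term so that the two cannot be tuned independently. The code performs gradient ascent on the utility (equivalently, gradient descent on its negative), with gradients taken by central finite differences to keep the example dependency-free; a real co-design pipeline would rely on automatic differentiation through differentiable surrogates of simulation and reconstruction.

```python
def surrogate_utility(params):
    """Hypothetical differentiable surrogate of a detector figure of merit.

    t, u: two made-up design parameters (arbitrary units). Each pair of terms
    trades a 'resolution' penalty (~1/x) against a 'cost' penalty (~x); the
    t*u term couples the two sub-systems, so they must be optimized jointly.
    """
    t, u = params
    return -(4.0 / t + t + 1.0 / u + 4.0 * u + 0.5 * t * u)


def numerical_gradient(f, params, h=1e-6):
    """Central finite-difference gradient of f at params."""
    grad = []
    for i in range(len(params)):
        up = list(params)
        down = list(params)
        up[i] += h
        down[i] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad


def gradient_ascent(f, start, lr=0.02, steps=2000):
    """Repeatedly step along the gradient to climb towards a maximum of f."""
    params = list(start)
    for _ in range(steps):
        g = numerical_gradient(f, params)
        params = [p + lr * gi for p, gi in zip(params, g)]
    return params


best = gradient_ascent(surrogate_utility, start=[1.0, 1.0])
print("optimal (t, u):", [round(p, 3) for p in best])
```

With 10^4 parameters, central finite differences would need 2 x 10^4 utility evaluations per step, which is one practical reason why automatic differentiation is the tool of choice.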

The objective function

Suppose I ask you to come up with the optimal recipe for packing spheres in a box: this is a very simple stipulation, amenable to brute-force solutions as well as to mathematical study (it is in fact a hard problem, but one that is solvable exactly). The simplicity of the task stems from it being a single task, and from it being perfectly well defined. Suppose, instead, I ask you to build a collider to find new physics. How can you come up with specifications for the machine that optimize its odds of finding new physics? This is a very ill-posed question, as we do not know where new physics lies, what masses the discoverable elementary particles could have, or what kind of phenomena they would be involved in. Trying to define an objective function (a utility) for that task is indeed quite problematic.

At a much more affordable level of fuzziness lies the question of what kind of detector we should build in order to explore deeper at the high-energy frontier, maybe with a higher-energy version of the Large Hadron Collider. We have a very clear idea of what works well when it comes to measuring with high precision the fundamental parameters of the standard model: this comes from two decades of studies of high-energy proton-proton collisions with the ATLAS and CMS experiments. So we may try to put together a list of measurements that we consider important probes of the inner workings of the standard model, and try to prioritize them.

I am sure that if we took a dozen of our top physicists in ATLAS or CMS and asked each of them to come up with a recipe for the relative value of measuring with higher precision some fundamental parameters accessible in high-energy proton-proton collisions, expressed as weights summing to 1.0, they would come back with widely varied lists of numbers. Why am I sure of that? Because I saw it with my own eyes, while watching the definition of the parameters of the CDF trigger system.
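The consequence of such disagreement can be shown in a few lines. In this sketch the two candidate designs, the three measurement categories, the precision-gain scores and both sets of weights are all invented for illustration; the only point is that a weighted-sum utility with weights summing to 1.0 can rank the same two designs in opposite order depending on whose weights are used.

```python
# Hypothetical precision-gain scores (0 to 1) of two candidate detector designs
DESIGNS = {
    "design_1": {"higgs_couplings": 0.9, "top_mass": 0.4, "qcd_jets": 0.3},
    "design_2": {"higgs_couplings": 0.5, "top_mass": 0.6, "qcd_jets": 0.9},
}

# Hypothetical priority weights of two physicists; each set sums to 1.0
WEIGHTS = {
    "physicist_A": {"higgs_couplings": 0.7, "top_mass": 0.2, "qcd_jets": 0.1},
    "physicist_B": {"higgs_couplings": 0.2, "top_mass": 0.3, "qcd_jets": 0.5},
}


def utility(gains, weights):
    """Weighted-sum utility of one design under one set of weights."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    return sum(weights[k] * gains[k] for k in weights)


def preferred_design(weights):
    """Name of the design with the highest utility under the given weights."""
    return max(DESIGNS, key=lambda name: utility(DESIGNS[name], weights))


for who, w in WEIGHTS.items():
    scores = {name: round(utility(g, w), 2) for name, g in DESIGNS.items()}
    print(who, scores, "prefers", preferred_design(w))
```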

The CDF trigger

I grew up as a scientist in CDF, a glorious experiment that studied proton-antiproton collisions provided by the Tevatron collider. CDF took data from 1985 to 2011, and along the way it discovered the top quark, made countless exceptional measurements in B physics, electroweak physics, and QCD, and eventually came close to challenging the LHC in the race for the 2012 discovery of the Higgs boson (it could have been a formidable competitor if some silicon detector upgrades had been funded in the early 2000s, but that unfortunately did not happen).

In the early 1990s the focus of CDF was the discovery of the last missing quark, the top. But many of the 600 scientists in the collaboration were not in it specifically because of a craving to discover the top: they were more interested in QCD, in B physics, and in other areas of subnuclear physics. That does not mean they were not fully enthusiastic when the top quark was eventually discovered, of course; but every researcher had his or her own scale of values when it came to the priorities of the experiment.

There was only one place where this varied scale of values fully surfaced into view: the Wednesday afternoon CDF trigger meetings. The experiment observed collisions taking place at its center at a rate of 350 kHz, but could only afford to write the full detector readout to tape at a mere 50 Hz. The rest of that precious bounty of particle collisions had to be dumped. How to decide what to keep and what to throw away? A trigger menu had to be devised, which would set minimum energy or momentum thresholds on interesting reconstructed objects (electrons, muons, jets, missing transverse energy). The summed accept rate of the various trigger conditions had to stay below 50 Hz or so, lest the data acquisition system drown in its own dirty water and generate dead time: effectively, the detector would then be blind for a fraction of the time. Imagine that an accept signal were given to 80 events per second: the first 50 would get written, and the others would get dropped, overrun by new ones coming in. The dropped events could be the most valuable ones, but their relative value would never get appraised. Dead time was a democratic but stupid way of coping with a higher rate of proton-antiproton collisions than could be stored.
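The dead-time arithmetic of the 80-events-per-second example can be written down explicitly. This is a sketch of the simplified model described in the text (anything accepted above the 50 Hz that can be written to tape is lost), not of the real CDF data acquisition, which had buffering; the per-trigger rates in the hypothetical menu are invented so that they add up to 80 Hz.

```python
CAPACITY_HZ = 50.0  # rate at which events could be written to tape (from the text)


def dead_fraction(accept_rate_hz, capacity_hz=CAPACITY_HZ):
    """Fraction of accepted events lost in the text's simplified model.

    Whatever is accepted above capacity is simply dropped; buffering and the
    random arrival times of real events are ignored.
    """
    if accept_rate_hz <= capacity_hz:
        return 0.0
    return 1.0 - capacity_hz / accept_rate_hz


# Hypothetical trigger menu: accept rate (Hz) of each trigger condition
MENU = {"high_pt_leptons": 4.0, "low_pt_leptons": 30.0, "jets": 46.0}

total_rate = sum(MENU.values())
print(f"{total_rate:.0f} Hz accepted -> {dead_fraction(total_rate):.1%} lost")
# prints: 80 Hz accepted -> 37.5% lost
```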

The matter was made worse by the fact that the Tevatron collider always strove to achieve the highest density of protons and antiprotons in its beams (and nobody would object, as this maximized the discovery potential of the experiments!). This caused many proton-antiproton collisions to take place during each bunch crossing, and bunch crossings occurred every 1/350,000 of a second (roughly every 3 microseconds). Every other day a new run would start, and its conditions would be different: perhaps the trigger menu that had worked well the day before would generate a huge dead time today. The trigger meeting was thus the place where CDF physicists made informed decisions on which thresholds to raise, and on which trigger conditions, in order to keep the dead time below a tolerable level of about 10%.

To give an example, there were high-pt electron and muon triggers. These were the conditions that ensured the experiment would collect events that could result from the production of a pair of top quarks: their full functionality had to be preserved, so their thresholds were never touched. This was an easy decision, as the accept rate of the resulting data streams was relatively small, perhaps a few hertz. Then there were jet triggers: in principle, jets are the commonest thing one can see in a proton-antiproton collision, so one could accept to collect only a small fraction of events with high-energy jets. However, many CDF scientists would have loved to have as much jet data as possible. Perhaps 20 hertz of jet events could be stored? Well, that could be a sound decision, but then there were scientists who wanted to study B physics, which relied on collecting low-momentum electrons and muons. Those, too, were relatively frequent. Should CDF collect more low-momentum leptons or more high-energy jets?

Beware: above I have been simplifying the matter immensely, as the CDF trigger menu included over a hundred different sets of requirements on the energy and type of objects measured by the online reconstruction. To make the situation more complex, there were actually three separate trigger levels, each feeding the events it accepted to the next one. But I think you get the idea: deciding what kind of datasets to collect, and how many events to acquire for each, was equivalent to deciding what the overall goal of the experiment was. In a way, the trigger of a hadron collider experiment IS the definition of its utility function.
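One way to see the trigger menu as a utility function is to write the bandwidth allocation as code. In this hypothetical sketch the rates, the weights and the function name `allocate_bandwidth` are invented; rate reduction is expressed as a prescale (keep one event in N) rather than as a raised threshold, just to keep it short. Protected streams, like the high-pt lepton triggers in the text, keep their full rate, and the rest of the 50 Hz budget is shared among the other streams in proportion to their (positive) weights, with any share a stream cannot use passed on to the others.

```python
# Hypothetical natural accept rates (Hz) of three trigger streams
RATES = {"high_pt_leptons": 4.0, "low_pt_leptons": 10.0, "jets": 200.0}
# Hypothetical relative weights of the streams that may be prescaled
STREAM_WEIGHTS = {"low_pt_leptons": 0.5, "jets": 0.5}
BUDGET_HZ = 50.0


def allocate_bandwidth(rates, weights, budget, protected):
    """Return the rate (Hz) allotted to each stream.

    Protected streams keep their full rate. The remaining budget is split
    among the other streams in proportion to their weights; a stream never
    gets more than its natural rate, and any excess is redistributed.
    """
    alloc = {name: rates[name] for name in protected}
    remaining = budget - sum(alloc.values())
    if remaining < 0:
        raise ValueError("protected streams alone exceed the budget")
    open_streams = [name for name in rates if name not in protected]
    while open_streams:
        wsum = sum(weights[name] for name in open_streams)
        shares = {name: remaining * weights[name] / wsum for name in open_streams}
        capped = [name for name in open_streams if shares[name] >= rates[name]]
        if not capped:
            alloc.update(shares)  # every open stream is limited by its share
            break
        for name in capped:  # these streams fit entirely: no prescale needed
            alloc[name] = rates[name]
            remaining -= rates[name]
            open_streams.remove(name)
    return alloc


allocation = allocate_bandwidth(RATES, STREAM_WEIGHTS, BUDGET_HZ,
                                protected={"high_pt_leptons"})
for name, rate in allocation.items():
    print(f"{name:16s} {rate:6.2f} Hz  prescale 1/{RATES[name] / rate:.1f}")
```

Changing the weights changes the prescales, and therefore what the experiment ends up measuring: that is precisely the utility choice argued over at the trigger meetings.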

Taking the matter from another perspective

Eventually, CDF did discover the top quark, and it produced groundbreaking results in a number of other measurements and searches. An a posteriori way to judge the relative merit of the various physics results produced by the experiment, one that includes in its definition a measure of their impact on the science CDF advanced, is the number of citations received by the publications based on those measurements.

If we look at the 20 most cited CDF publications in the years 1992-2000, we get the following picture:

- observation of top quark production, and related papers (4 papers): 6084 citations

- J/psi and psi(2S) production, and related papers (4 papers): 1661 citations

- Bc observation (1998), and a related paper (2 papers): 819 citations

- measurement of the total proton-antiproton cross section (2 papers): 867 citations

- double parton scattering (2 papers): 668 citations

- CDF technical design report (1 paper): 433 citations

- W boson mass measurement (2 papers): 551 citations

- single diffraction, and associated papers (3 papers): 856 citations

Leaving aside the detector-related paper (after observing that the citations it got give us a scale of the relative importance of detector developments per se), we are left with the task of aggregating the seven classes of papers according to the triggers that produced the respective analyses. The top quark and W mass papers were mainly produced with high-momentum lepton triggers, and amounted to 6635 citations; the J/psi and B physics papers, mainly produced through the study of low-pt lepton triggers, brought CDF researchers 2480 citations; and the QCD measurements, enabled by the study of jet triggers, 2391 citations. Normalizing these numbers to their sum (11506 citations), we finally get that the "relative value" of the high-pt lepton triggers, low-pt lepton triggers, and jet triggers in CDF during Run 1 (the data-taking period that produced the publications discussed above) is 57.7%, 21.6%, and 20.8%, respectively.
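The aggregation above is simple arithmetic, and a few lines of Python reproduce it. The citation counts are those listed in the text, and the assignment of each group of papers to a trigger class follows the text as well; the dictionary and function names are only illustrative. Run as written, it yields 6635, 2480 and 2391 citations, i.e. 57.7%, 21.6% and 20.8%.

```python
from collections import defaultdict

# (citations, trigger class) for each group of papers, as listed in the text;
# the technical design report is left out, as in the text
PAPER_GROUPS = {
    "top quark observation": (6084, "high-pt leptons"),
    "W boson mass": (551, "high-pt leptons"),
    "J/psi and psi(2S) production": (1661, "low-pt leptons"),
    "Bc observation": (819, "low-pt leptons"),
    "total cross section": (867, "jets"),
    "double parton scattering": (668, "jets"),
    "single diffraction": (856, "jets"),
}


def relative_value(groups):
    """Sum citations per trigger class and normalize to fractions of the total."""
    totals = defaultdict(int)
    for citations, trigger_class in groups.values():
        totals[trigger_class] += citations
    grand_total = sum(totals.values())
    return dict(totals), {k: v / grand_total for k, v in totals.items()}


totals, shares = relative_value(PAPER_GROUPS)
for trigger_class, n in totals.items():
    print(f"{trigger_class:16s} {n:5d} citations  {shares[trigger_class]:.1%}")
```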

And what were the overall thresholds of the three kinds of triggers in CDF during Run 1? Alas, that is not easy to determine. However, the high-pt lepton triggers took much less than 57% of the bandwidth because of their rarity; their momentum thresholds could have been lowered to increase the rate, but the discovery potential of the resulting increased acceptance would have been minimal, as most of the reconstructable top quark events did not require looser thresholds to be acquired. I find it much more interesting to observe how the QCD triggers, which were always the ones CDF managers turned to when dead time had to be reduced, produced a whopping 21% of the high-impact scientific results of CDF in Run 1. Of course, such a figure can only be evaluated post mortem, but if anything it teaches us that being a good experimentalist, and making sound decisions on the goal of one's experiment, is very tough!

The utility of future experiments

So how should the problem of defining a global utility function be approached for a future experiment, one for which we want the help of a deep-learning-powered co-design modeling effort to explore the high-dimensional space of design choices in a full, continuous way? I think there is no substitute for getting the stakeholders in a room and throwing away the key until they converge on a set of multipliers: weights that reflect the relative importance of the different kinds of studies that the experimental data can eventually enable. Opinions will differ wildly, and their barycenter will probably not be an excellent proxy for the scientific impact of those choices as measured ex post; on the other hand, it will be a guiding lamppost for studies of holistic optimization, which I am convinced we cannot ignore any further.
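Computing the "barycenter" of the stakeholders' opinions is the easy part. The sketch below does it for four invented weight vectors over three hypothetical measurement categories, and also reports the per-category standard deviation as a crude measure of how widely opinions differ. A plain arithmetic mean is an assumption here: one could equally weigh stakeholders differently, or use a median to limit the pull of extreme opinions.

```python
import statistics

# Hypothetical weight vectors proposed by four stakeholders (each sums to 1.0)
PROPOSALS = [
    {"higgs_couplings": 0.6, "top_mass": 0.2, "qcd_jets": 0.2},
    {"higgs_couplings": 0.2, "top_mass": 0.5, "qcd_jets": 0.3},
    {"higgs_couplings": 0.3, "top_mass": 0.1, "qcd_jets": 0.6},
    {"higgs_couplings": 0.5, "top_mass": 0.3, "qcd_jets": 0.2},
]


def barycenter(proposals):
    """Mean weight per category, renormalized so the result sums to 1.0."""
    keys = proposals[0].keys()
    mean = {k: statistics.fmean(p[k] for p in proposals) for k in keys}
    norm = sum(mean.values())
    return {k: v / norm for k, v in mean.items()}


def disagreement(proposals):
    """Population standard deviation of each weight across stakeholders."""
    keys = proposals[0].keys()
    return {k: statistics.pstdev([p[k] for p in proposals]) for k in keys}


consensus = barycenter(PROPOSALS)
spread = disagreement(PROPOSALS)
for k in consensus:
    print(f"{k:16s} weight {consensus[k]:.3f} +- {spread[k]:.3f}")
```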

It is interesting to note, at the end of this long post, that whenever I talk about co-design to my colleagues (at seminars, conferences, or workshops), the question of how to define the utility of a large experiment is a big hitter. For instance, I was asked it for the umpteenth time yesterday, when, in a presentation to my colleagues in Padova, I discussed the need for a working group on co-design within the update of the European Strategy for Particle Physics, which is in progress as we speak. Mind you, I am never unhappy to answer it, as I think it is a very relevant question! In fact, I almost did not let Martino Margoni finish formulating it: I knew where he was going from the third word he said (sorry Martino!).

I do hope that these few lines, and even more the article I will write on this matter, will help scientists focus on this important topic in the future!
