Mobile phones are in the hands of 80 percent of Americans, so manufacturers are scrambling to find new ways to keep people buying the next model.

Being able to use gestures in the space around the phone rather than needing a screen may be the next big thing. Some smartphones have incorporated 3-D gesture sensing based on cameras, for example, but cameras consume significant battery power and require a clear view of the user's hands.

University of Washington engineers have developed a new form of low-power wireless sensing technology that could soon contribute to this growing field by letting users "train" their smartphones to recognize and respond to specific hand gestures near the phone.

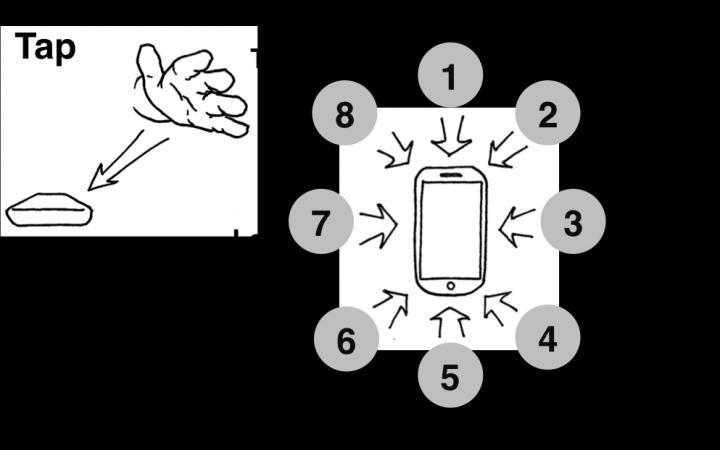

The SideSwipe system uses the phone's wireless transmissions to sense nearby gestures. Credit: University of Washington

The technology uses the phone's wireless transmissions to sense nearby gestures, so it works even when a device is out of sight in a pocket or bag, and it could easily be built into future smartphones and tablets.

"Today's smartphones have many different sensors built in, ranging from cameras to accelerometers and gyroscopes that can track the motion of the phone itself," said Matt Reynolds, associate professor of electrical engineering and of computer science and engineering. "We have developed a new type of sensor that uses the reflection of the phone's own wireless transmissions to sense nearby gestures, enabling users to interact with their phones even when they are not holding the phone, looking at the display or touching the screen."

Team members will present their project, called SideSwipe, and a related paper Oct. 8 at the Association for Computing Machinery's Symposium on User Interface Software and Technology in Honolulu.

When a person makes a call or an app exchanges data with the Internet, a phone transmits radio signals on a 2G, 3G or 4G cellular network to communicate with a cellular base station. When a user's hand moves through space near the phone, the user's body reflects some of the transmitted signal back toward the phone.

The new system uses multiple small antennas to capture the changes in the reflected signal and classify the changes to detect the type of gesture performed. In this way, tapping, hovering and sliding gestures could correspond to various commands for the phone, such as silencing a ring, changing which song is playing or muting the speakerphone. Because the phone's wireless transmissions pass easily through the fabric of clothing or a handbag, the system works even when the phone is stowed away.
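The article does not spell out SideSwipe's signal processing. As a rough illustration of capturing changes in the reflected signal at several antennas, here is a minimal Python sketch. It assumes each antenna reports a short window of received-amplitude samples plus a "no hand nearby" baseline amplitude. The function names and the three per-antenna features (mean change, peak change and normalized time of the peak) are hypothetical choices for illustration, not the team's actual design.

```python
from typing import List, Sequence


def antenna_features(samples: Sequence[float], baseline: float) -> List[float]:
    """Summarize how one antenna's received amplitude deviates from its no-hand baseline.

    Hypothetical features: mean change, signed peak change, and the normalized
    time (0.0 = start of window, 1.0 = end) at which the largest change occurs.
    """
    if not samples:
        raise ValueError("need at least one sample")
    deltas = [s - baseline for s in samples]
    mean_change = sum(deltas) / len(deltas)
    # Index of the largest absolute deviation, e.g. when the hand is nearest this antenna.
    peak_index = max(range(len(deltas)), key=lambda i: abs(deltas[i]))
    peak_change = deltas[peak_index]
    peak_time = peak_index / (len(deltas) - 1) if len(deltas) > 1 else 0.0
    return [mean_change, peak_change, peak_time]


def gesture_features(windows: Sequence[Sequence[float]], baselines: Sequence[float]) -> List[float]:
    """Concatenate the features of every antenna into one vector for a classifier."""
    if len(windows) != len(baselines):
        raise ValueError("one baseline per antenna is required")
    features: List[float] = []
    for samples, baseline in zip(windows, baselines):
        features.extend(antenna_features(samples, baseline))
    return features


# Toy example with two antennas: the hand passes the first antenna early in the
# window and the second one late, as in a slide from one side of the phone to the other.
first_antenna = [1.0, 1.8, 1.2, 1.0, 1.0]
second_antenna = [1.0, 1.0, 1.1, 1.9, 1.0]
print(gesture_features([first_antenna, second_antenna], [1.0, 1.0]))
```

Because the hand passes the two antennas at different moments, their peak times differ (0.25 for the first antenna, 0.75 for the second). That is the kind of cue a classifier could use to tell one sliding direction from the other.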

"This approach allows us to make the entire space around the phone an interaction space, going beyond a typical touchscreen interface," Patel said. "You can interact with the phone without even seeing the display by using gestures in the 3-D space around the phone."

An image showing how users can interact with a smartphone using a tapping gesture. Credit: University of Washington

A group of 10 study participants tested the technology by performing 14 different hand gestures, including hovering, sliding and tapping, in various positions around a smartphone. Each time, the phone was first calibrated by learning the user's hand movements and then trained itself to respond. The team found the smartphone recognized gestures with about 87 percent accuracy.
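Per-user calibration followed by recognition can be illustrated with a deliberately simple classifier. This sketch assumes each gesture has already been reduced to a numeric feature vector. It uses a nearest-centroid rule only for brevity; the classifier SideSwipe actually uses may differ. The function names and the training and test values are made-up toy data, not study results. Accuracy here is simply the fraction of held-out gestures classified correctly.

```python
import math
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[List[float], str]  # (feature vector, gesture label)


def train_centroids(samples: Sequence[Sample]) -> Dict[str, List[float]]:
    """Average the feature vectors one user recorded for each gesture (calibration)."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def classify(features: Sequence[float], centroids: Dict[str, List[float]]) -> str:
    """Return the gesture whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


def accuracy(test: Sequence[Sample], centroids: Dict[str, List[float]]) -> float:
    """Fraction of labeled test gestures that the classifier gets right."""
    correct = sum(1 for features, label in test if classify(features, centroids) == label)
    return correct / len(test)


# Hypothetical toy data for a single user with two gestures.
training = [([0.9, 0.1], "tap"), ([1.1, 0.0], "tap"),
            ([0.0, 1.0], "hover"), ([0.2, 0.8], "hover")]
centroids = train_centroids(training)
test = [([1.0, 0.2], "tap"), ([0.1, 0.9], "hover"), ([0.6, 0.5], "hover")]
print(accuracy(test, centroids))
```

With this toy data, the ambiguous third test gesture lands closer to the "tap" centroid, so the script reports an accuracy of two out of three (about 0.667).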

There are other gesture-based technologies, such as "AllSee" and "WiSee," recently developed at the UW, but researchers say there are important advantages to the new approach.

"SideSwipe's directional antenna approach makes interaction with the phone completely self-contained, because you're not depending on anything in the environment other than the phone's own transmissions," Reynolds said. "Because the SideSwipe sensor is based only on low-power receivers and relatively simple signal processing compared with video from a camera, we expect SideSwipe would have a minimal impact on battery life."

The team has filed patents on the technology and will continue developing SideSwipe, integrating the hardware and making a "plug and play" device that could be built into smartphones, said Chen Zhao, project lead and a UW doctoral student in electrical engineering.
