I recently read a book by Martin Rees, "On the Future". I found it an agile little book, packed with wisdom and interesting considerations on what is in store for humanity in the coming decades, centuries, millennia, and billions of years. I agree with much of what he wrote in it, and on several topics I found views that coincide with judgements I had independently formed in the past.

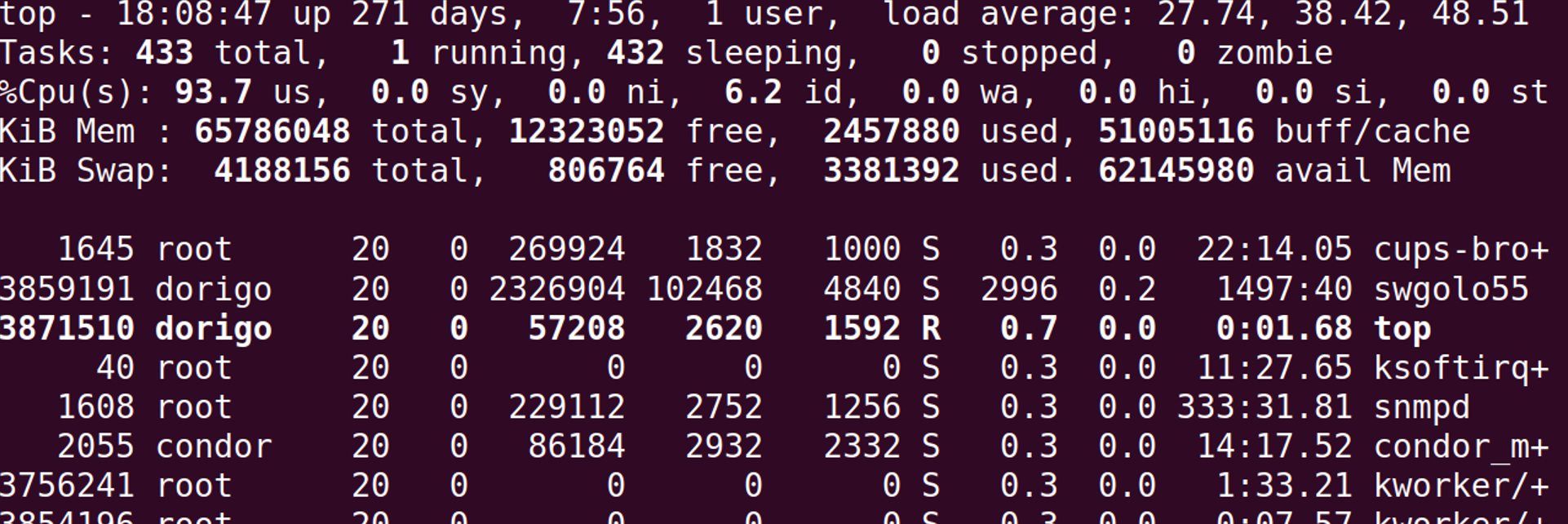

What is multithreading? It is the use, by a single computer program, of multiple processors (or processor cores) to perform tasks in parallel. I have known this simple fact for over thirty years, but funnily enough I never explored it in practice. The reason is fundamentally that I am a physicist, not a computer scientist, and as a physicist I tend to stick with a known skillset to solve my problems, and to invest my time in acquiring more physics knowledge rather than software wizardry. You might well say that I am not a good programmer at all, although that would secretly cause me pain. My answer would be that while my programs are certainly ugly and hard to read, they do what they are supposed to do, as proven by a fair record of scientific publications.

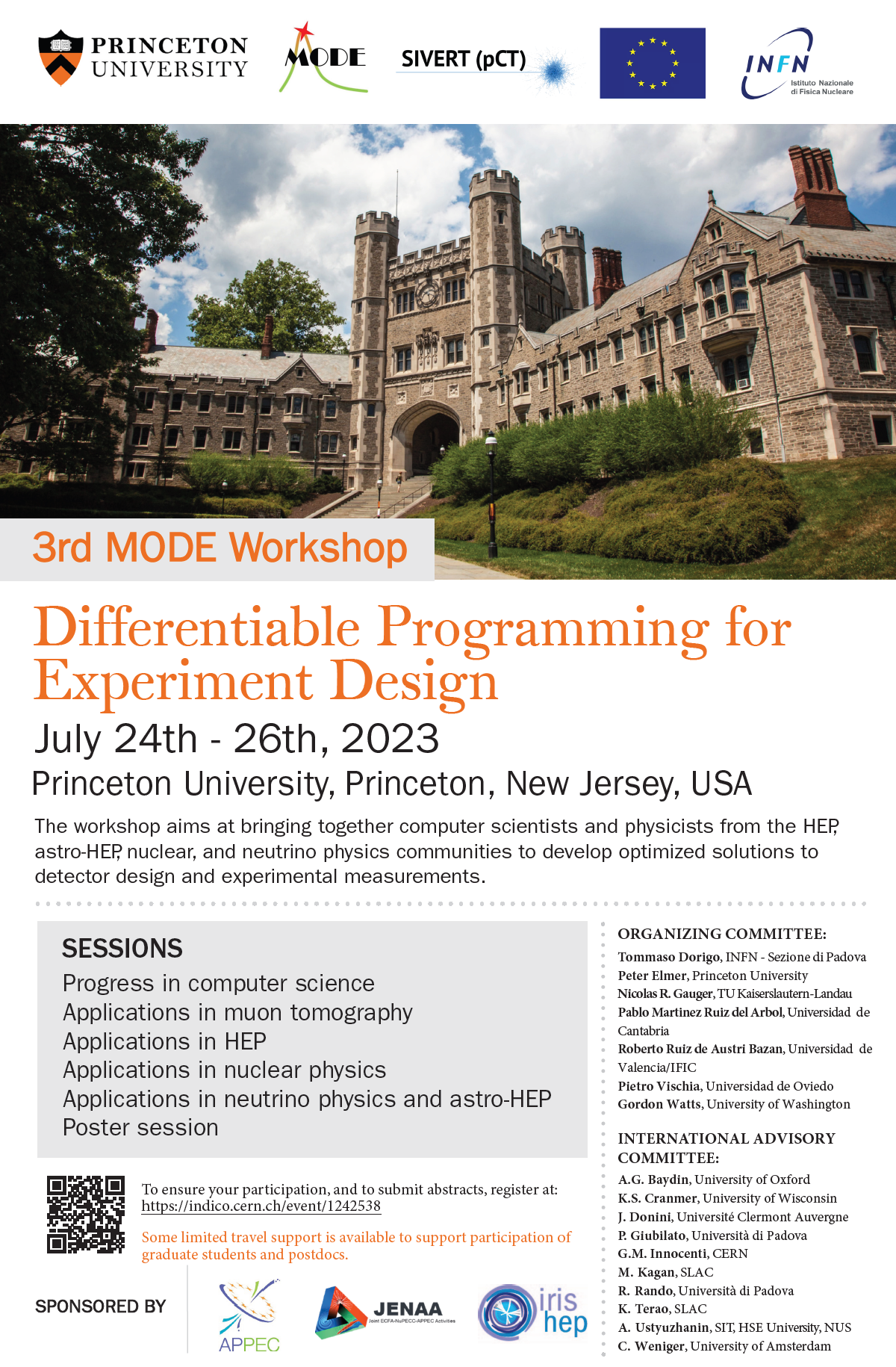

Wait a minute: why is an article about automatic differentiation filed under the "Physics" category? Well, I will explain that in a moment. First of all, let me explain what automatic differentiation is.

Computing derivatives of functions by hand is a rather error-prone job. Maybe it is just me, but if you give me a complicated function whose dependence on a variable is spread across several sub-functions, I am very likely to obtain N different results if I do the calculation N times. Yes, I am 57 years old, and I should be handling other things and leaving these calculations to younger lads, I agree.
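To make the idea concrete, here is a minimal sketch of one common flavour of automatic differentiation, forward mode with dual numbers. It is an illustration under my own assumptions, not necessarily the method discussed in the full article: every number carries its value together with its derivative, and each elementary operation applies the corresponding rule (sum, product, quotient, chain rule) mechanically, so the derivative of a function built from nested sub-functions comes out exact to machine precision without any manual bookkeeping. The names `Dual`, `derivative`, `f`, `g`, and `h` are hypothetical, chosen only for this example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """Dual number val + der*eps with eps**2 = 0: carries f(x) and f'(x) together."""
    val: float
    der: float = 0.0

    def __add__(self, other):
        other = _lift(other)
        return Dual(self.val + other.val, self.der + other.der)

    __radd__ = __add__

    def __sub__(self, other):
        other = _lift(other)
        return Dual(self.val - other.val, self.der - other.der)

    def __rsub__(self, other):
        return _lift(other) - self

    def __mul__(self, other):
        other = _lift(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.val * other.val, self.der * other.val + self.val * other.der)

    __rmul__ = __mul__

    def __truediv__(self, other):
        other = _lift(other)
        # Quotient rule: (u/v)' = (u'v - uv') / v^2
        return Dual(self.val / other.val,
                    (self.der * other.val - self.val * other.der) / other.val ** 2)

    def __rtruediv__(self, other):
        return _lift(other) / self


def _lift(x):
    """Treat plain numbers as constants (zero derivative)."""
    return x if isinstance(x, Dual) else Dual(float(x), 0.0)


def sin(x):
    x = _lift(x)
    # Chain rule: d/dx sin(u) = cos(u) * u'
    return Dual(math.sin(x.val), math.cos(x.val) * x.der)


def exp(x):
    x = _lift(x)
    e = math.exp(x.val)
    # Chain rule: d/dx exp(u) = exp(u) * u'
    return Dual(e, e * x.der)


def derivative(func, x0):
    """Return (func(x0), func'(x0)) by seeding the input with derivative 1."""
    out = func(Dual(x0, 1.0))
    return out.val, out.der


# A function whose x-dependence is spread over several sub-functions.
def g(x):
    return x * x + 1


def h(x):
    return sin(x) * exp(x)


def f(x):
    return h(x) / g(x)


print(derivative(f, 0.0))  # → (0.0, 1.0)
```

The design choice here is operator overloading: the function `f` is written exactly as one would write it for plain numbers, and the derivative propagates through `g` and `h` automatically. Reverse-mode differentiation, the variant used to train neural networks, organizes the same chain-rule bookkeeping in the opposite direction, which is more efficient when a function has many inputs and few outputs.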

Although researchers in fundamental science tend to "stick to what works" and avoid disruptive innovations until these have been shown to be well tested and robust, the recent advances in computer science that led to the spread of deep neural networks, ultimately stemming from the large increase in computer performance over the past few decades (Moore's law), cannot be ignored. And they have not been: the 2012 discovery of the Higgs boson, for instance, made heavy use of machine learning techniques to improve the sensitivity to the particle signals acquired by the ATLAS and CMS detectors.

Summer is supposedly a period when people take it a tad easier, spend some vacation time away from anything work-related, and "tune out" of the deadlines and rhythms of daily work that dominate their existence during the rest of the year.

One of the things that keeps me busy these days is the organization of a collective publication by a number of experts in artificial intelligence and top researchers in all areas of scientific investigation. I will tell you more about that project another time, but today I wish to share with you the first draft of a short introduction I wrote for it. I am confident that it will undergo a number of revisions and additions, so by the time we eventually publish our work, the text will no doubt be quite different from what you get to read here, which makes me comfortable about pre-publishing it.

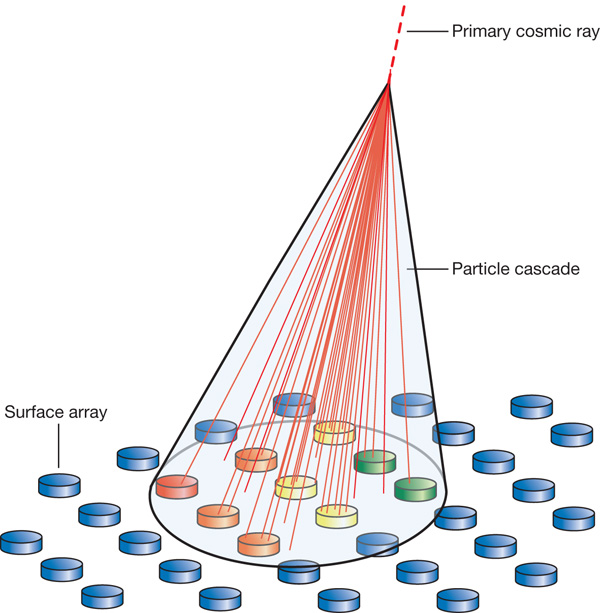

Flying Drones With Particle Detectors Some Notes On The Utility Function Of Fundamental Science Experiments

Some Notes On The Utility Function Of Fundamental Science Experiments